The rise of autonomous agents based on Artificial Intelligence (AI) is reshaping how businesses, governments, and individuals interact with technological systems.

Amid this progress, the debate about governance and ethics in AI agents becomes essential to ensuring responsible, safe use aligned with human values.

This article explores the fundamentals and best practices for creating a trustworthy and transparent AI ecosystem.

What are AI agents and why do they require specific governance?

Unlike static or predictive models, AI agents can observe their environment, make autonomous decisions, and act with minimal or no human intervention.

This characteristic creates new ethical and operational challenges, as it involves a high degree of autonomy, a dynamic context, and continuous learning.

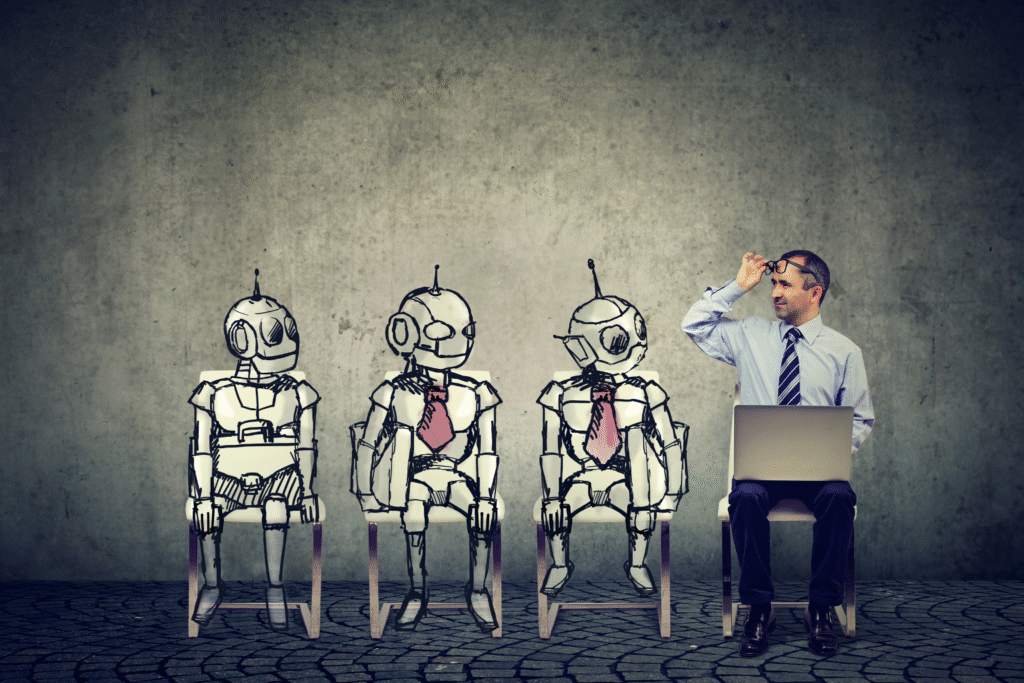

When an agent makes a mistake, such as assigning an incorrect diagnosis or making an improper bank transfer, the inevitable question arises: who takes responsibility?

The answer requires a robust, multidisciplinary AI governance structure based on accountability, transparency, and regulatory alignment.

If you want to work professionally and responsibly in this AI ecosystem, learn more about the Agent and Automation Manager Training with AI from NoCode StartUp, a practical and strategic path for those who want to lead ethically and efficiently.

Fundamental principles of ethics in intelligent agents

The development of intelligent agents must be anchored in values such as beneficence, justice, non-maleficence, and autonomy.

These principles are present in international frameworks such as the OECD AI Principles and in the EU AI Act, pioneering European Union legislation for classifying risk and liability.

The principle of explainability (Explainable AI) is one of the most important. It ensures that decisions made by an agent can be understood, audited, and justified by humans.

This is critical in sectors such as health, finance, and education, where opacity can lead to irreversible damage.
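
To make the idea of an auditable, justifiable decision concrete, here is a minimal sketch in Python. It assumes the simplest possible case, a linear scoring model, where each feature's contribution is just its weight times its value. The feature names, weights, and threshold are hypothetical and chosen only for illustration; real agents usually need dedicated explainability tooling.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Explanation:
    score: float
    decision: str
    contributions: dict[str, float]  # feature name -> signed contribution


def explain_linear_decision(features, weights, bias, threshold):
    """Score a case with a linear model and record why it scored that way."""
    # For a linear model, each feature contributes weight * value to the score.
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    # Cases below the threshold are not rejected outright but sent to a person.
    decision = "approve" if score >= threshold else "refer_to_human"
    return Explanation(score, decision, contributions)


# Hypothetical weights and applicant data, for illustration only.
weights = {"income_k": 0.5, "late_payments": -2.0, "years_employed": 1.0}
applicant = {"income_k": 4.0, "late_payments": 3.0, "years_employed": 2.0}

result = explain_linear_decision(applicant, weights, bias=1.0, threshold=0.0)

# Rank features by how strongly they pushed the score in either direction.
ranked = sorted(result.contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
print(result.decision, result.score)
for name, value in ranked:
    print(f"{name}: {value:+.1f}")
```

Storing the contributions next to the decision gives a reviewer something specific to check: here, the history of late payments outweighs the other factors, which is why the case is referred to a human.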

Strategies for implementing effective AI governance

Creating a governance structure is not limited to documenting guidelines. It involves operational practices such as creating an AI ethics committee, regular training, technical audits, and a clear definition of responsibilities.

According to Electric Mind, it is essential to incorporate iterative and collaborative processes, aligning teams from technology, legal, product, and regulatory areas.

Tools like IBM's AI Explainability 360 and the Azure Responsible AI Dashboard help monitor performance, bias, and ethical alignment.
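
As a rough illustration of what bias monitoring can mean in practice, the sketch below computes one common fairness measure, the demographic parity difference: the gap between the highest and lowest approval rates across groups. It is written in plain Python and does not use or reproduce the APIs of the tools named above. The decision log, group labels, and alert threshold are assumptions made for the example.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the share of positive decisions per group.

    `decisions` is an iterable of (group, approved) pairs.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        positives[group] += int(approved)
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_difference(decisions):
    """Gap between the highest and lowest group selection rates."""
    rates = selection_rates(decisions)
    return max(rates.values()) - min(rates.values())


# Hypothetical decision log produced by an agent, for illustration only.
log = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

rates = selection_rates(log)              # {"A": 0.75, "B": 0.25}
gap = demographic_parity_difference(log)  # 0.5

ALERT_THRESHOLD = 0.2  # assumed policy value; set by the governance team
needs_review = gap > ALERT_THRESHOLD
print(rates, gap, needs_review)
```

A single metric like this does not prove or rule out discrimination, but tracking it over time gives an ethics committee an objective trigger for a closer audit.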

For those who want to delve deeper into the market-leading tools, the AI NoCode Training offers practical mastery of the technologies and frameworks required by new regulatory demands.

Common risks in environments with autonomous agents

Among the main risks associated with AI agents are algorithmic bias, the lack of human supervision, and Shadow AI, in which employees use unauthorized tools for automation.

In corporate environments, these risks can compromise everything from compliance with the LGPD (Brazil's General Data Protection Law) to institutional reputation.

The absence of containment and audit mechanisms can result in financial losses, data leaks, and discriminatory decisions.

Therefore, governance needs to include contingency plans and continuous updating of models.
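
The containment, human supervision, and contingency mechanisms described above can be sketched as a small approval gate placed between the agent and the actions it wants to take. This is an illustrative design, not a standard API: the class name `ActionGate`, the two limits, and the `kill_switch` flag are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from enum import Enum


class Outcome(Enum):
    EXECUTED = "executed"
    PENDING_APPROVAL = "pending_approval"
    BLOCKED = "blocked"


@dataclass
class ActionGate:
    auto_limit: float          # amounts up to this run without human review
    hard_limit: float          # amounts above this are always blocked
    kill_switch: bool = False  # contingency: halt every autonomous action
    audit_log: list = field(default_factory=list)

    def submit(self, agent_id, action, amount):
        """Decide what happens to a requested action and record the decision."""
        if self.kill_switch or amount > self.hard_limit:
            outcome = Outcome.BLOCKED
        elif amount > self.auto_limit:
            outcome = Outcome.PENDING_APPROVAL  # a human must sign off first
        else:
            outcome = Outcome.EXECUTED
        # Every request is logged, including the ones that were refused.
        self.audit_log.append((agent_id, action, amount, outcome.value))
        return outcome


# Hypothetical limits and agent identifier, for illustration only.
gate = ActionGate(auto_limit=1_000, hard_limit=50_000)
small = gate.submit("agent-7", "bank_transfer", 250)
medium = gate.submit("agent-7", "bank_transfer", 5_000)
large = gate.submit("agent-7", "bank_transfer", 80_000)

gate.kill_switch = True  # contingency plan activated
halted = gate.submit("agent-7", "bank_transfer", 10)
print(small, medium, large, halted)
```

The point of the design is that the agent never calls the sensitive operation directly: the gate decides, and the log answers the question of who approved what.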

Real-world use cases and best practices

Companies like Microsoft and AWS have been leading the way in AI governance best practices, especially in high-impact sectors.

Microsoft: Responsible AI in cloud services

Microsoft implemented its AI governance framework in Azure services, including cognitive agents used by hospitals and financial institutions.

The company makes impact reports publicly available, promoting transparency and accountability on a global scale.

Amazon: Auditable Logistics Agents with AWS

Amazon uses AI agents to automate its global distribution centers. In doing so, the company incorporated audit trails and models trained on algorithmic fairness principles using AWS AI Services.
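
To show what an audit trail can look like at the code level, here is a generic sketch of a tamper-evident log in Python, in which each entry stores the hash of the previous one. It is not a description of Amazon's or AWS's implementation, which the article does not detail. The class name `AuditTrail` and the record fields are hypothetical.

```python
import hashlib
import json


class AuditTrail:
    """Append-only log where each entry is chained to the previous one by hash."""

    GENESIS = "0" * 64  # placeholder hash for the first entry

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(prev_hash, record):
        # sort_keys makes the serialization, and so the hash, deterministic.
        payload = json.dumps({"prev": prev_hash, "record": record}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def append(self, record):
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        entry = {
            "record": record,
            "prev": prev_hash,
            "hash": self._digest(prev_hash, record),
        }
        self.entries.append(entry)
        return entry

    def verify(self):
        """Recompute every hash; any edited or reordered entry breaks the chain."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            expected = self._digest(prev_hash, entry["record"])
            if entry["prev"] != prev_hash or entry["hash"] != expected:
                return False
            prev_hash = entry["hash"]
        return True


# Hypothetical agent actions, for illustration only.
trail = AuditTrail()
trail.append({"agent": "picker-12", "action": "reroute_package", "package": "PKG-001"})
trail.append({"agent": "picker-12", "action": "flag_damage", "package": "PKG-002"})
intact = trail.verify()

# Simulate someone quietly rewriting history after the fact.
trail.entries[0]["record"]["action"] = "discard_package"
still_valid = trail.verify()
print(intact, still_valid)
```

A chain like this only detects tampering; in practice it would be paired with restricted write access and copies held outside the team that operates the agent.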

Unilever: Ethical AI for talent analysis

Unilever adopted AI agents for automated analysis of video interviews during the recruitment process.

The system was developed with a focus on zero bias and has undergone independent audits to ensure fairness.

United Kingdom: National AI governance with a public focus

The British government, through the Centre for Data Ethics and Innovation (CDEI), created ethical guidelines for AI agents applied in public services, such as social assistance and health.

The initiative emphasizes explainability and continuous monitoring.

Tools and resources for AI agent governance

In addition to those already mentioned, other relevant tools include:

These resources enable everything from tracking changes in models to generating audit and compliance reports, which helps increase the reliability of the AI ecosystem.

Future trends and emerging legislation

The future of governance and ethics in AI agents depends on integration with regulations such as the EU AI Act and on the creation of systems that automatically align agents' objectives with human values.

Researchers at Arion Research highlight the emergence of distributed accountability models, in which multiple actors assume distinct responsibilities within the agent's life cycle.

The application of explainability and fairness techniques, combined with robustness testing, will be increasingly required in sectors such as defense, health, and education.

The trend is for regulatory bodies to require periodic reports on ethical performance and social impact.

Pathways to a trustworthy and human AI ecosystem

Building an ecosystem based on governance and ethics in AI agents requires more than sophisticated technologies: it demands institutional commitment, continuous education, and intelligent regulations.

Organizations that get ahead with solid structures, monitoring tools, and a culture of accountability are more likely to innovate safely.

If you want to prepare professionally to apply governance, ethics, and AI automation strategically, access the Agent and Automation Manager Training with AI and learn in practice how to lead projects with responsibility and technical expertise.