Have you noticed that the most effective ads today don't look like ads at all? Most of the videos that actually convert on social media have a simple and natural format, as if they were made by real people.

This is what we call UGC, or User-Generated Content.

The problem is that producing UGC at scale is expensive and time-consuming. That's when we decided to test a new concept: using AI (Artificial Intelligence) to automatically create UGC videos.

These videos you're about to see were generated from a simple image and a product description, without a camera, without actors, and without editing. Automation does it all.

What is UGC and why does it convert so well?

The reason why UGC has become the new standard in digital marketing is simple: ultimately, audiences trust people, not brands.

Smart brands understood this and started swapping expensive studio productions for short, spontaneous UGC-style videos.

As mentioned above, the challenge is that producing this content at scale is expensive and time-consuming. That's where automation comes in.

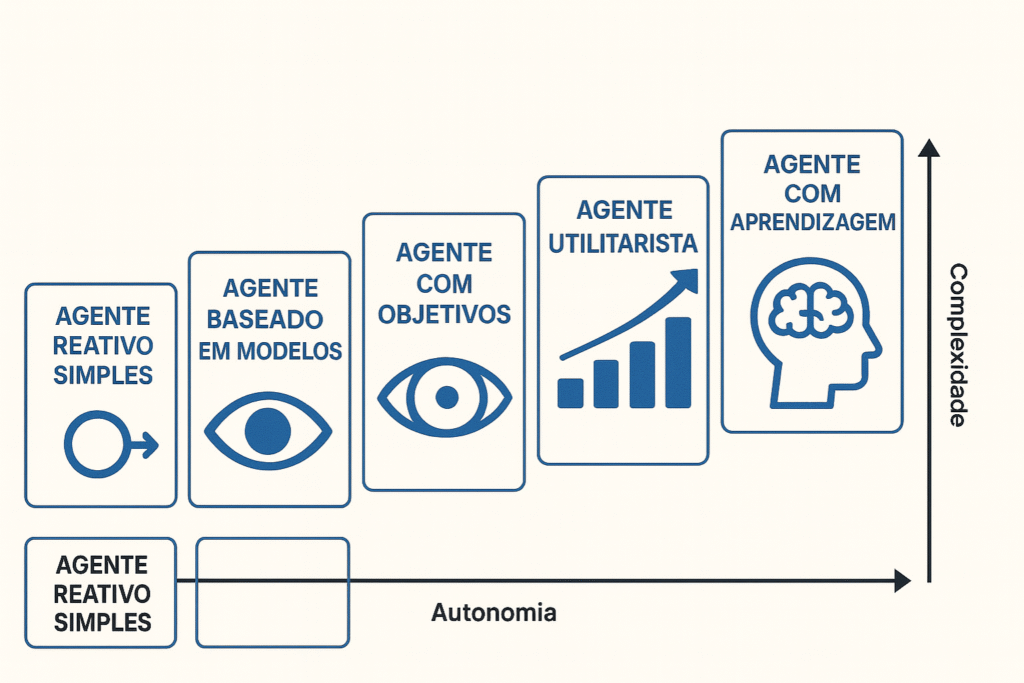

Process automation: overview

We tested a concept for using AI to automatically create these UGC videos. The process analyzes the image we send, creates the scene, and delivers the finished video in minutes.

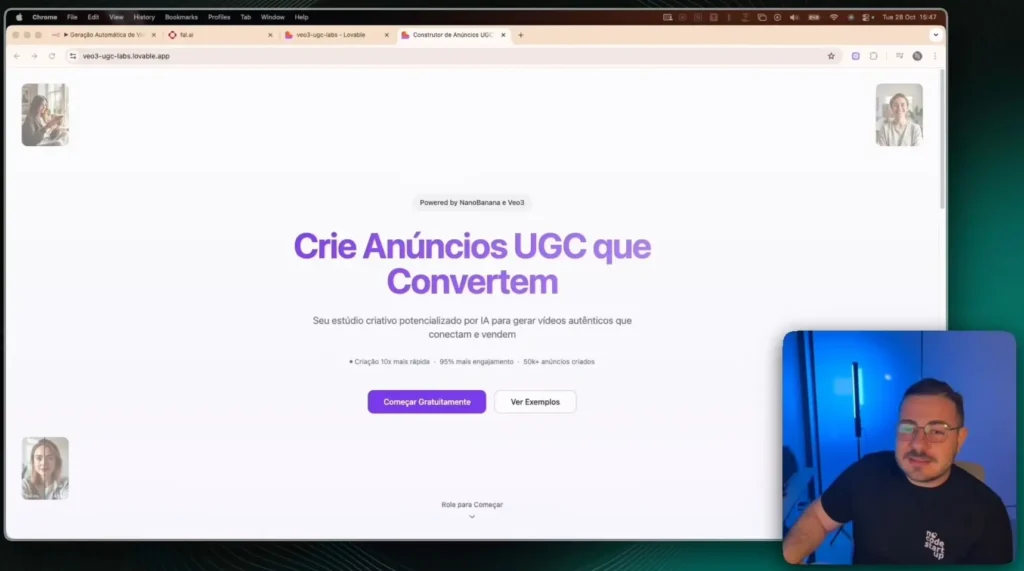

The user simply accesses an app, uploads an image of the product (or the person they want in the photo), writes a brief description of the ad, and enters their email address.

Soon after, the UGC video arrives via email, ready to be used in ads, Reels, or wherever you want.

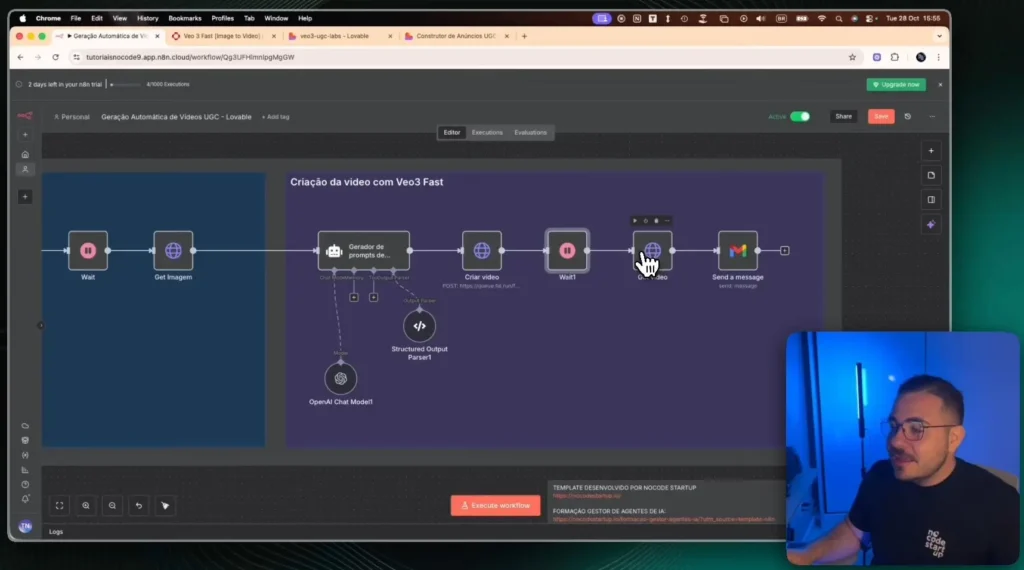

Practical workflow in Lovable and N8N

To make this work, we use some connected tools.

The user accesses a website that we created in Lovable, which functions as our interface (front-end). When the user clicks "Create ad," the app calls a webhook in N8N, which is the brain of the whole automation.
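To make the webhook call concrete, here is a minimal sketch of the request the "Create ad" button could send. The URL, the JSON field names, and the choice to send the image as base64 are all assumptions for illustration; the real Lovable app may send the data differently (for example, as a multipart form).

```python
import base64
import json
import urllib.request

# Hypothetical URL: the real one comes from the Webhook node in N8N.
WEBHOOK_URL = "https://example.com/webhook/create-ad"


def build_webhook_request(image_bytes: bytes, description: str, email: str,
                          url: str = WEBHOOK_URL) -> urllib.request.Request:
    """Build (but do not send) the POST request fired by the "Create ad" button."""
    payload = {
        # Field names are assumptions, not the article's actual schema.
        "image_base64": base64.b64encode(image_bytes).decode("ascii"),
        "description": description,
        "email": email,
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_webhook_request(
    b"fake-image-bytes", "Energetic ad for a water bottle", "user@example.com"
)
# urllib.request.urlopen(request) would actually send it to the webhook.
```

Building the request separately from sending it keeps the sketch testable without a live workflow.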

In N8N, the workflow begins by receiving the data: the image, the description, and the email. The first step is to save this image to Google Drive so that we can have a public link to it.
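On the receiving side, it helps to check that all three fields actually arrived before uploading anything or calling any AI. The sketch below is plain Python rather than an N8N node, and the field names are the same hypothetical ones used for illustration; the Google Drive upload itself is not shown.

```python
from dataclasses import dataclass

REQUIRED_FIELDS = ("image_base64", "description", "email")  # assumed names


@dataclass(frozen=True)
class AdRequest:
    image_base64: str
    description: str
    email: str


def parse_ad_request(payload: dict) -> AdRequest:
    """Validate the webhook payload before any upload or AI call is made."""
    # Normalize every field to a trimmed string so missing and blank look alike.
    cleaned = {key: str(payload.get(key) or "").strip() for key in REQUIRED_FIELDS}
    missing = [key for key, value in cleaned.items() if not value]
    if missing:
        raise ValueError(f"missing fields: {', '.join(missing)}")
    if "@" not in cleaned["email"]:
        raise ValueError("email does not look valid")
    return AdRequest(**cleaned)
```

Rejecting bad input at this point avoids spending image and video generation credits on a request that can never be delivered.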

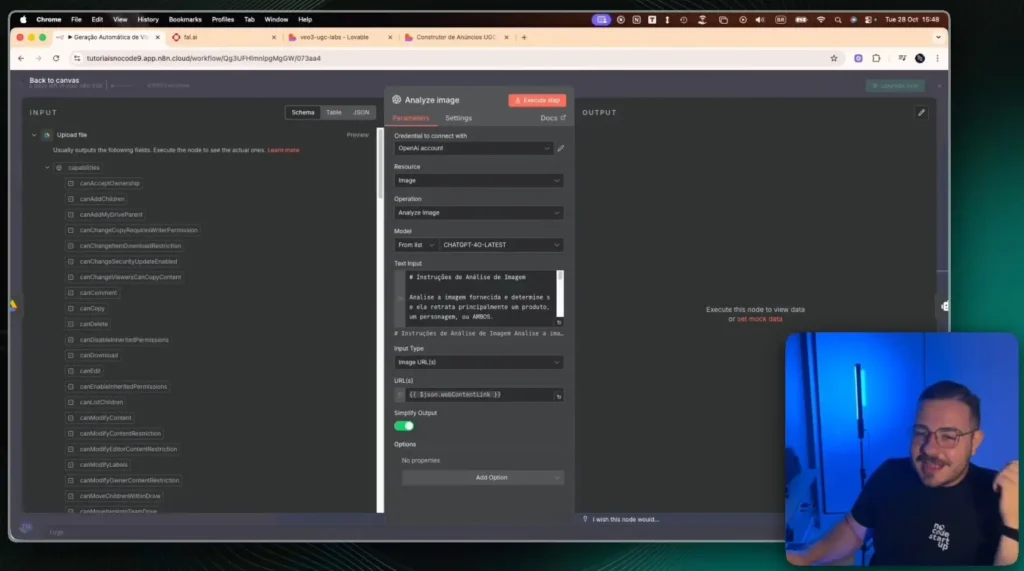

This link is then sent to an AI agent from OpenAI. This agent analyzes the image and creates a YAML file for us, which is basically a detailed technical description of what it "saw" in the image.
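For a sense of what the agent receives, here is a rough sketch of the messages it could be given. It assumes the agent is called through a Chat Completions-style API that accepts an image URL; the article's workflow does not say which API or model is used, and the instruction wording is ours.

```python
def build_analysis_messages(image_url: str) -> list[dict]:
    """Build chat messages asking a vision model for a YAML description of the image."""
    # The instruction text and the aspects listed are assumptions for illustration.
    instructions = (
        "Describe the image as YAML only. "
        "Cover the subject, setting, lighting, colors, and camera angle."
    )
    return [
        {"role": "system", "content": instructions},
        {
            "role": "user",
            "content": [
                {"type": "text", "text": "Analyze this reference image."},
                # The public Google Drive link from the previous step goes here.
                {"type": "image_url", "image_url": {"url": image_url}},
            ],
        },
    ]
```

This is why the image needs a public link first: the model fetches it by URL instead of receiving the file itself.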

AI-powered image and video generation

With the reference image analysis (the YAML) and the user description in hand, N8N begins the creative phase.

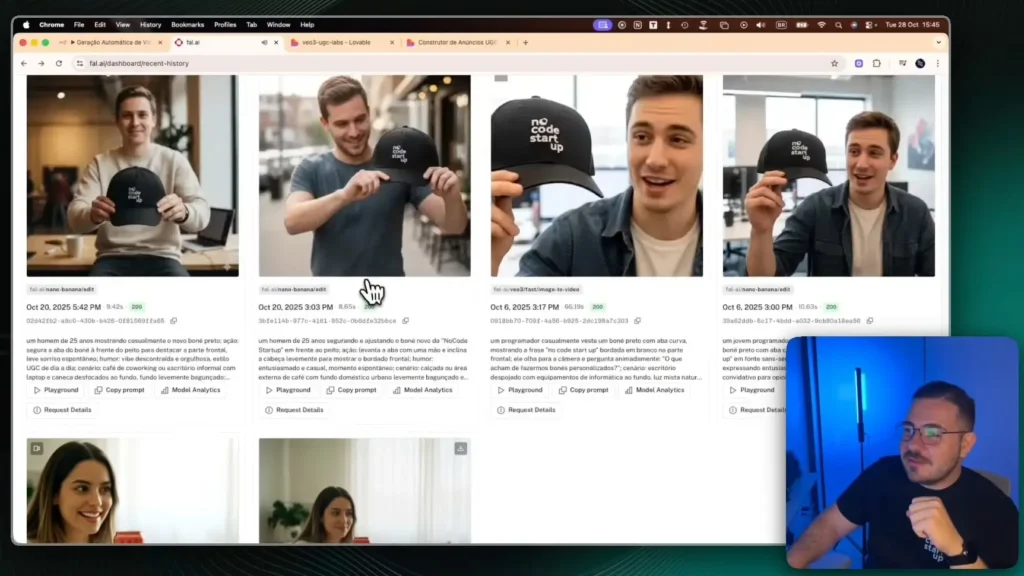

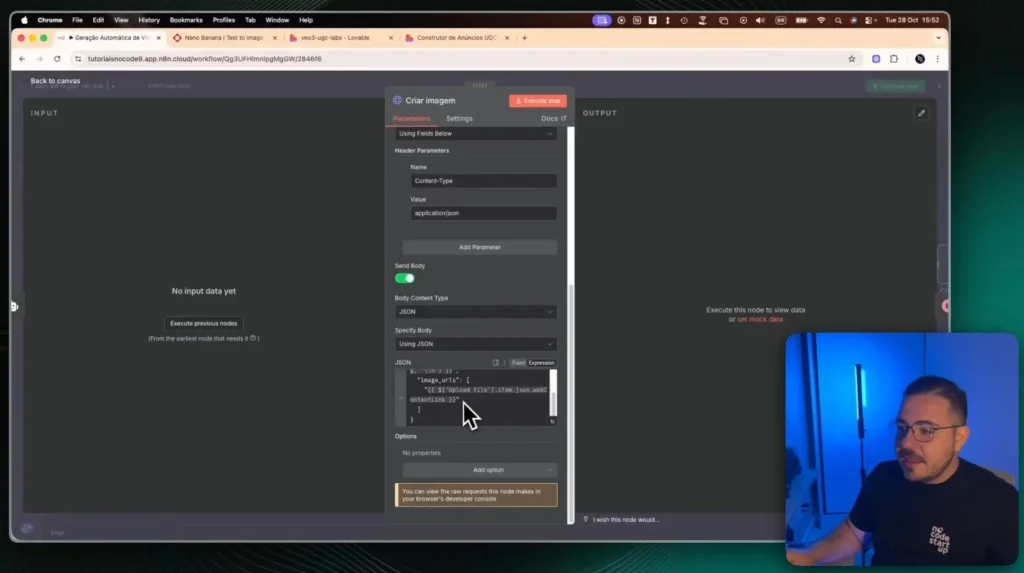

We use NanoBanana to create the images and Veo 3 to create the videos, both accessed through the fal.ai platform.

First, a “prompt generator” creates an image prompt by combining the user's description with the AI analysis. This prompt is sent to NanoBanana, which generates the first image (the opening frame of the video).
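The idea behind the prompt generator can be sketched with a simple template. This is a simplification: in the workflow the generator is presumably an AI step, while here we just combine the two inputs as strings. The wording of the template and the sample YAML are made up for illustration.

```python
def build_image_prompt(user_description: str, image_analysis_yaml: str) -> str:
    """Combine the user's ad brief with the YAML analysis into one image prompt."""
    description = " ".join(user_description.split())  # collapse stray whitespace
    return (
        "Create a realistic, casual smartphone-style photo for a UGC ad.\n"
        f"Ad brief: {description}\n"
        "Keep the product consistent with this reference analysis:\n"
        f"{image_analysis_yaml.strip()}"
    )


# Hypothetical analysis, standing in for what the agent "saw" in the image.
sample_yaml = """
subject: blue water bottle
setting: kitchen counter
lighting: soft daylight
"""
prompt = build_image_prompt("A friendly ad  for our water bottle", sample_yaml)
```

Keeping the analysis in the prompt is what ties the generated frame to the product in the uploaded photo.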

After waiting 20 seconds for the image to be ready, N8N does the same for the video: it creates a video prompt and sends it to Veo 3, along with the image we just created.

Since the video takes a little longer, we've added a 3-minute wait. Once it's ready, N8N retrieves the final video link and sends it to the user via email.
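Put together, the creative phase is a short sequence of submit, wait, and fetch steps. The sketch below mirrors that order with the two fixed waits from the workflow. The function names are hypothetical, and the AI and email calls are passed in as parameters, so nothing here depends on a specific provider's API.

```python
import time
from typing import Callable

IMAGE_WAIT_SECONDS = 20    # wait for the first frame to be generated
VIDEO_WAIT_SECONDS = 180   # wait for the video (3 minutes)


def run_pipeline(
    image_prompt: str,
    video_prompt: str,
    email: str,
    submit_image: Callable[[str], str],       # returns a job id (assumed)
    submit_video: Callable[[str, str], str],  # takes prompt + image URL
    fetch_result: Callable[[str], str],       # job id -> result URL
    send_email: Callable[[str, str], None],
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Run image -> wait -> video -> wait -> email and return the video link."""
    image_job = submit_image(image_prompt)
    sleep(IMAGE_WAIT_SECONDS)
    image_url = fetch_result(image_job)

    # The generated image is sent along with the video prompt.
    video_job = submit_video(video_prompt, image_url)
    sleep(VIDEO_WAIT_SECONDS)
    video_url = fetch_result(video_job)

    send_email(email, video_url)
    return video_url
```

Fixed waits keep the workflow simple, but they assume generation always finishes in time. If that turns out not to hold, checking the job status in a loop until it completes is a common alternative.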

Customization and examples of results

The result is a video that arrives in the inbox ready to use, with a natural, realistic look: the kind of content that engages. We've seen examples that looked incredibly realistic, as if real people were describing a product.

The coolest part is the technology stack behind it, all without code: Lovable for the interface, N8N as the brain, fal.ai (with NanoBanana and Veo 3) as the creative AI, and Gmail completing the loop.

The best part is that this same system can be adapted. If you want to generate videos in English, just adjust the prompt. If you want to save a history, just add a database. If you want to sell this as a service, simply connect a checkout.

This same case becomes the basis for dozens of other AI-powered marketing automations.

If you want to learn how to build systems like this, that sell, delight, and automate the impossible, you're invited:

Get to know the AI Coding Training.