The rapid rise and increasing autonomy of Large Language Models (LLMs) They radically transformed the technological landscape.

In the No-Code/Low-Code ecosystem, where speed of implementation is a crucial competitive differentiator, the security and predictability of these models have become a central concern.

Enter the framework. IA Petri Anthropic is an open-source system designed to solve the biggest challenge in modern AI security: scale.

O IA Petri It's not just another testing tool; it's a paradigm shift that replaces inefficient static benchmarks with a model of... AI automated audit based on intelligent agents, offering a agency guarantee which is essential for any startup that wants to scale its solutions with confidence.

The Problem of Scale in AI Security: Why Static Benchmarks Have Failed

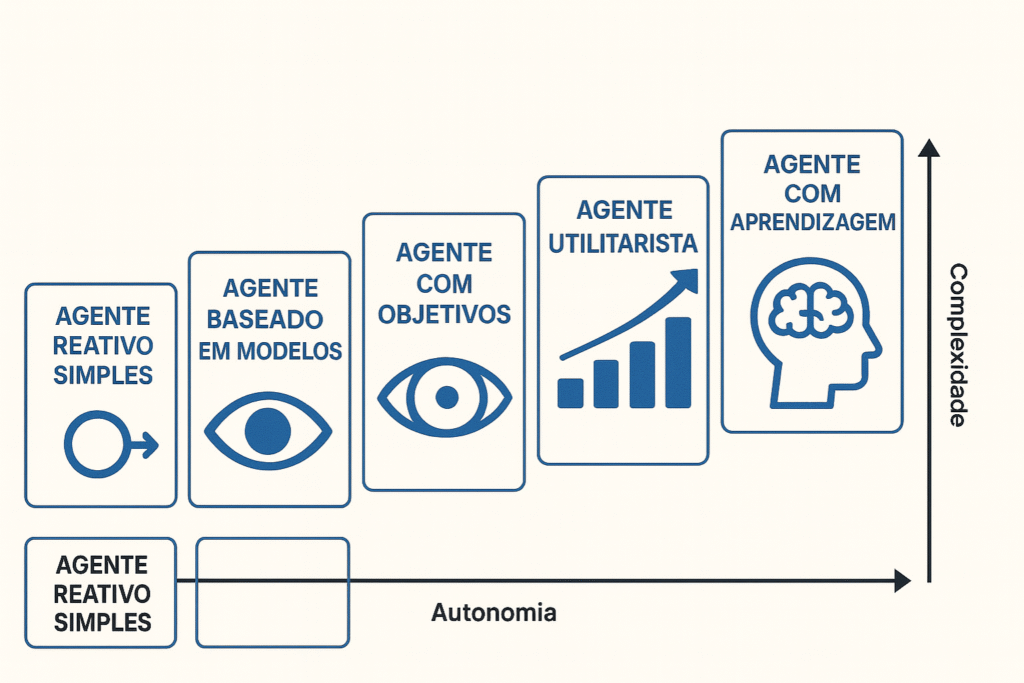

As the LLMs As technologies advance in capacity and become increasingly autonomous – able to plan, interact with tools, and execute complex actions – the risk surface expands exponentially.

This growth places unsustainable pressure on traditional safety assessment methods.

The Inadequacy of the Red Teaming Manual in the Era of Complex LLMs

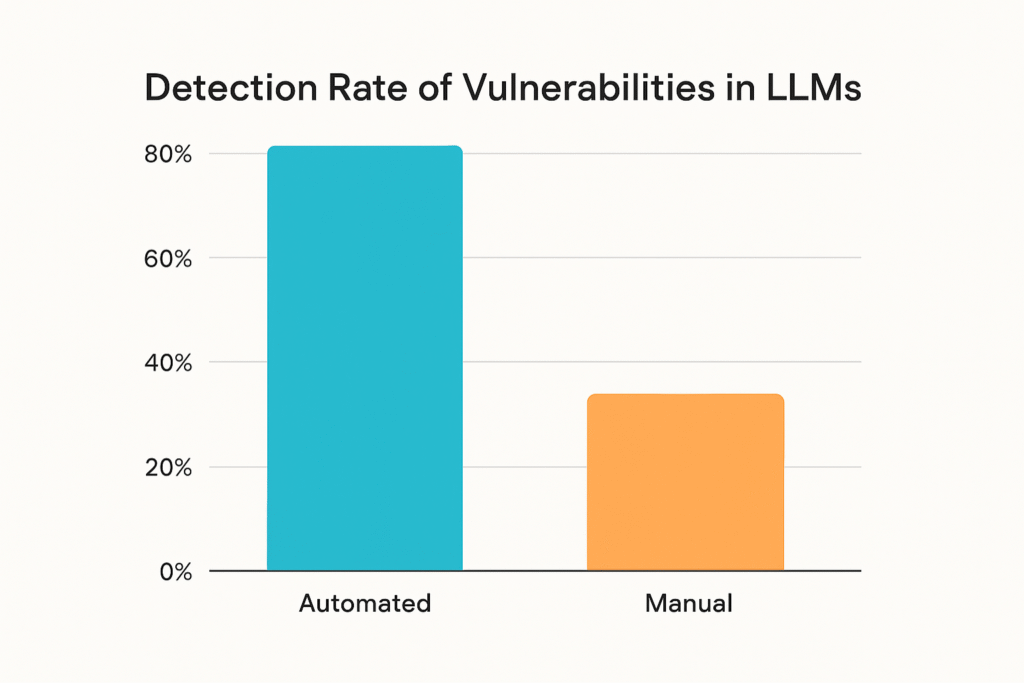

Historically, the assessment of LLM security depended mainly on red teaming manual: teams of experts who actively try to "break" or exploit the model.

While this approach is invaluable for in-depth investigations, it is by nature slow, labor-intensive, and, most importantly, not scalable.

The sheer volume of possible behaviors and combinations of interaction scenarios far exceeds what any human team can systematically test.

The limitation lies in repeatability and scope. Manual tests are often specific to a scenario and difficult to replicate in new models or versions.

In a low-code development cycle, where iterations are rapid and frequent, relying solely on one-off and time-consuming audits creates a security gap that can be exploited.

THE AI automated audit It therefore presents itself not as an option, but as a technical necessity to keep pace with the speed of innovation.

Emergent Behaviors and the Exponential Attack Surface

AI models, especially the most advanced ones, exhibit emerging AI behaviors.

This means that the interaction of their complex neural networks can result in capabilities or vulnerabilities that were not explicitly trained or predicted.

It is this unpredictable nature that makes them static benchmarks – Pre-defined tests with a fixed set of questions and answers – obsolete.

They only test what we already know, leaving aside the vast space of the "unknown unknown".

The attack surface for misalignment – where the model acts in harmful or unintended ways – grows in direct proportion to its capacity and autonomy.

O IA Petri It was designed precisely to address this dynamic nature, using artificial intelligence (agents) itself to interrogate the Target Model in a creative and systematic way, simulating the complex interactions of the real world.

IA Petri's Agency Architecture: Components and Audit Dynamics

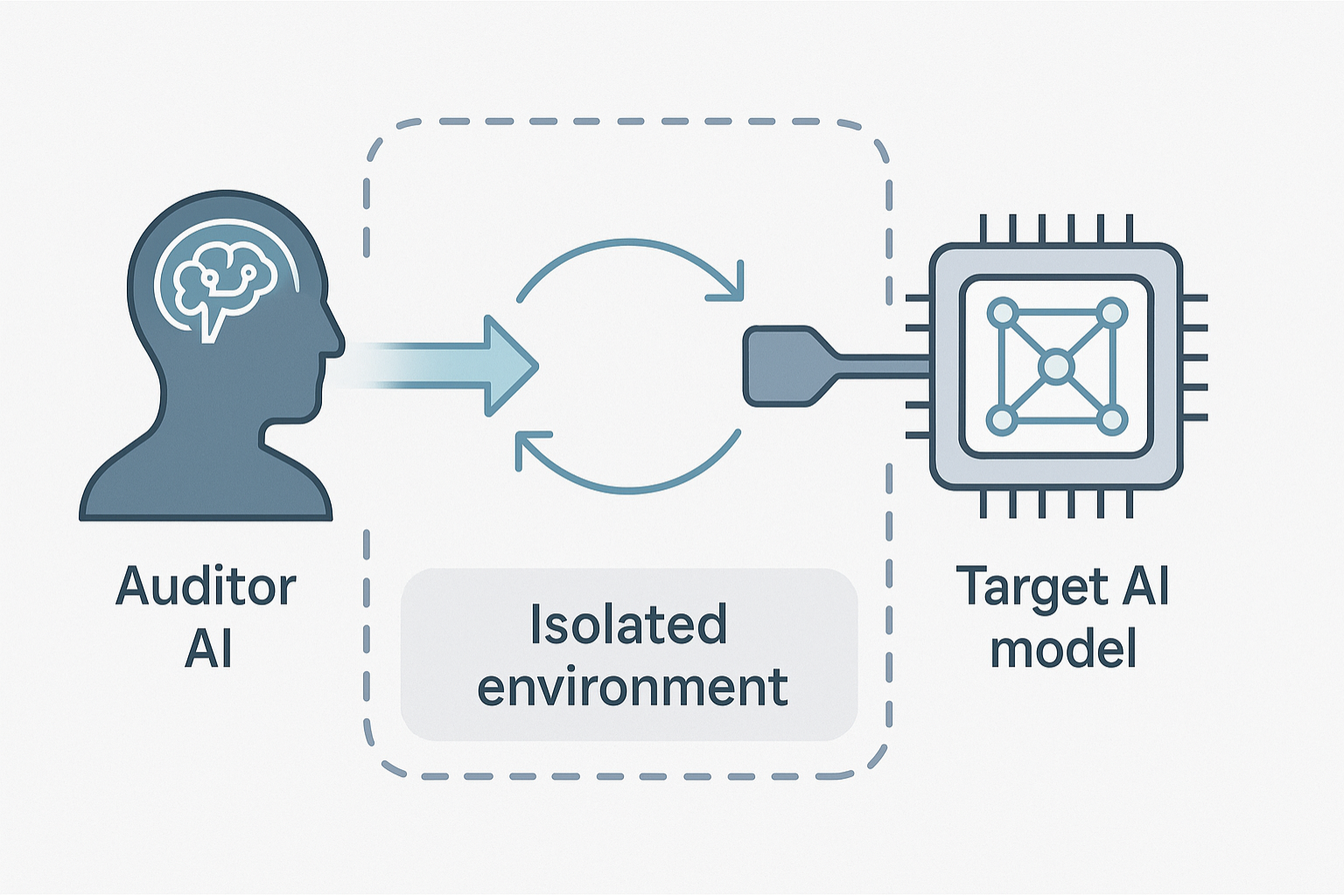

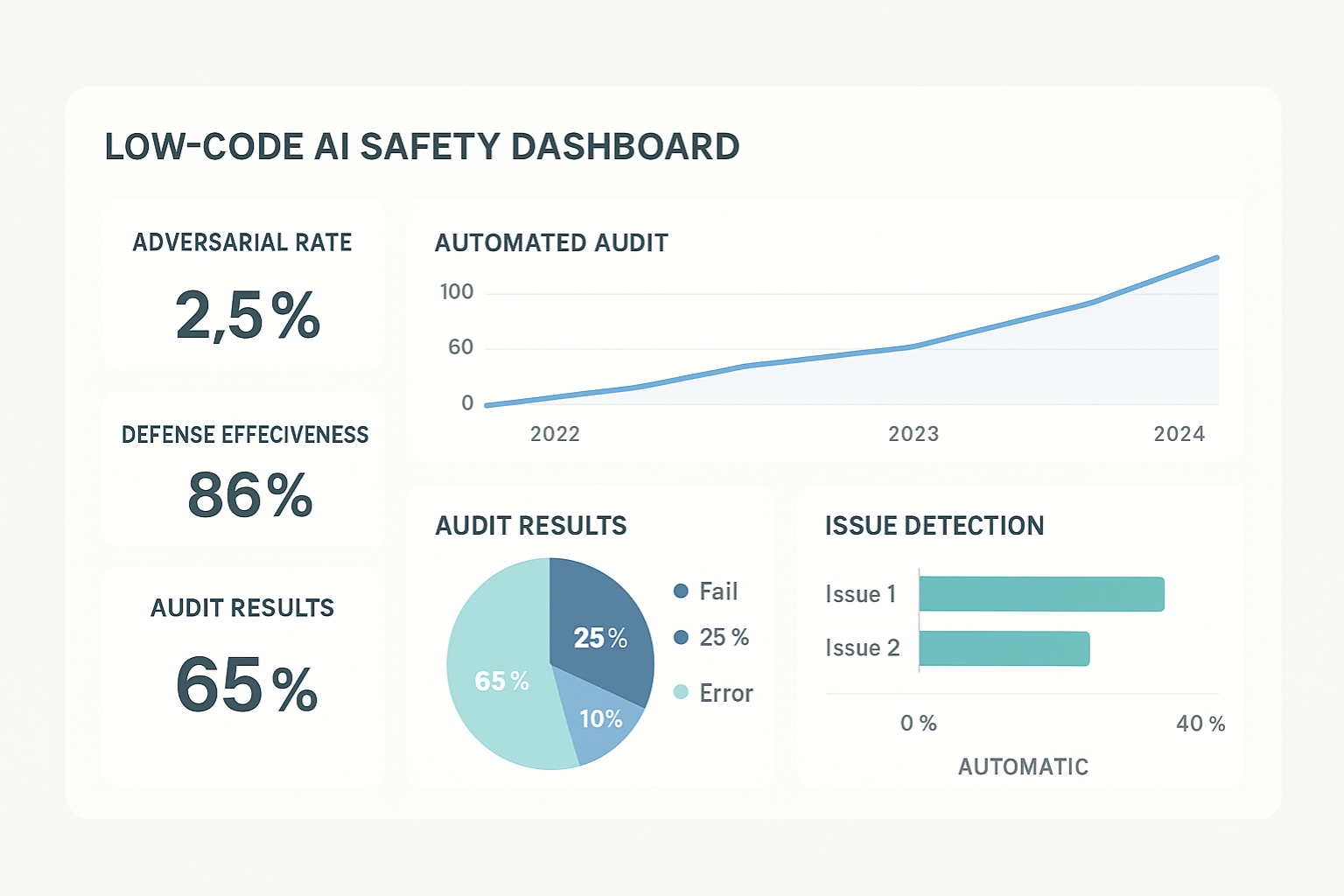

O IA Petri It functions as an evaluation ecosystem where the model to be audited is placed in a controlled environment and challenged by an adversarial agent.

The sophistication of this framework lies in the separation of responsibilities into modular and interconnected components, which makes it a solution for... agency security framework highly structured, detailed in your research paper (The Agentic Oversight Framework).

The Target Model and the Need for Continuous Evaluation

The Target Model is the LLM being tested. It can be any model, from Anthropic's own Claude model to an open-source model integrated into a Low-Code workflow.

The beauty of IA Petri it is your ability to perform dynamic assessment of LLMs. Instead of a test post-mortem, It allows for continuous, real-time auditing, which is crucial for teams that are constantly deploying and adjusting their applications.

The Audit Agent and the Scenarios Engine: The Heart of Dynamic Testing

Herein lies the power of IA Petri. The Audit Agent is a simpler, more dedicated LLM program specialized in testing the limits of the Target Model.

He is not merely a passive tester; he acts as a red teamer (autonomous) adversary, generating sequences of malicious or strategically misaligned interactions.

The Scenarios Engine is responsible for structuring the tests, ensuring that the Auditor Agent explores a wide range of attack vectors, from prompt injection to attempts to generate prohibited information.

This dynamic allows for a much deeper and more replicable exploration than any manual test, as detailed in the tool's official release (Anthropic AI Launches Petri: An Open Source Framework).

The Controlled Environment: Ensuring Test Reproducibility

The environment is the simulated context where the interaction takes place. It is fundamental to the science of AI evaluation, as it allows the same tests to be run accurately on different models or on different iterations of the same model.

This ability to reproducibility This is a milestone for the security of AI models, allowing Low-Code development teams to incorporate audit results directly into their CI/CD (Continuous Integration/Continuous Delivery) pipelines.

To better understand how to structure the technological foundation for these systems, you can delve deeper into... What is AI infrastructure and why is it essential?.

Automated Red Teaming and the Concept of Agency Assurance with Petri AI

THE IA Petri raises the concept of red teaming by automating it with AI agents.

The ultimate goal is to Agency Guarantee, In other words, having confidence that a model will maintain its... language model alignment and safety, even under stress, without the need for constant human intervention.

AI Petri vs. Common Evaluation Tools (DeepEval, Garak): A Technical Comparison

There are excellent open-source tools in the LLM evaluation space. Tools such as Garak it's the DeepEval They offer robust capabilities for scanning vulnerabilities, performing fuzzing, or evaluating the quality of the model output.

O paper academic who describes the Garak, For example, it focuses on probing the security of LLMs. Other tools, such as those listed among the Top 5 Open-Source AI Red-Teaming Tools, they complement the ecosystem.

O DeepEval's GitHub repository It also demonstrates a focus on evaluation metrics.

While DeepEval may focus on evaluating metrics and Garak on discovering known vulnerabilities, the IA Petri uses an adversary's own intelligence to to generate actively explore new attack vectors and exploit vulnerabilities that are not on any pre-existing checklist.

He does, in fact, simulate malicious intent, escalating the Red Teaming of LLMs to a new level of sophistication.

Generating Complex Scenarios: Testing the Alignment and Security of Language Models

The framework's main feature is its ability to automatically generate test scenarios that cover a wide range of AI security risks, including:

- Generating Dangerous Content: Attempts to make the model produce instructions for illegal or harmful activities.

- Data Leak: Exploring vulnerabilities to extract sensitive information from the model.

- Instructional Misalignment: Ensuring that the model does not pursue unintended or dangerous objectives, even when instructed to do so by a user, is a central point discussed in the article that underpins the... Agency Guarantee framework.

The Audit Agent adapts and learns from the Target Model's responses, making the audit an iterative and continuous "hunt" process.

Types of Vulnerabilities Discovered and the Importance of Open Source

Since its launch, the IA Petri They have demonstrated the ability to uncover subtle flaws that would go unnoticed by traditional methods, reinforcing the urgency of a dynamic approach.

The fact that it's a project open-source (as announced at the launch of Petri by AnthropicThis allows the global AI security community to collaborate in defining and executing scenarios, accelerating the mitigation of vulnerabilities across all models.

This transparency is vital for trust in the AI ecosystem.

Practical Application for No-Code/Low-Code Developers: Integrating Dynamic Security

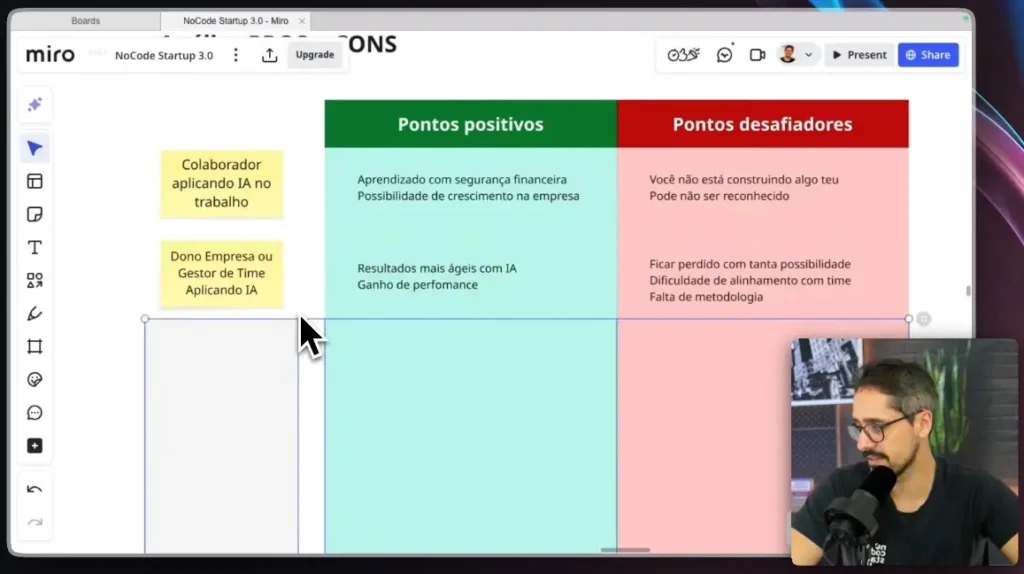

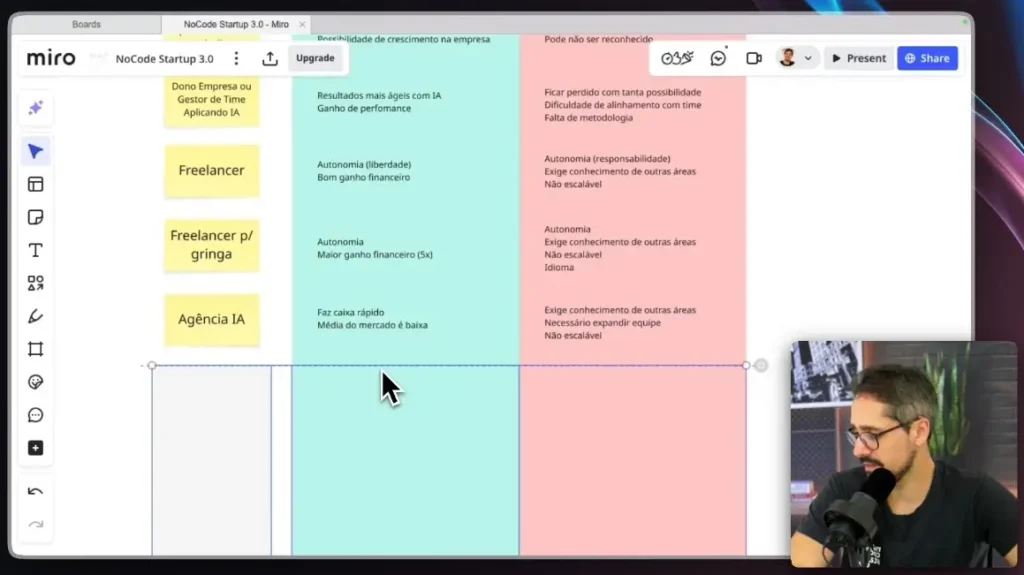

For the Low-Code developer or the startup leader at No Code Start Up, the question is not merely theoretical: it's about how to translate this advanced technology into more reliable products.

Mitigating Risks in Autonomous Applications and AI Agents

The greatest relevance of IA Petri is in the construction of AI Agents and standalone applications.

When an agent is given the ability to interact with the real world (such as sending emails, processing payments, or managing tasks), the misalignment transforms from a textual error into a high-risk operational failure.

By incorporating principles of AI automated audit like the IA Petri, Low-code developers can stress-test their agents before deployment, ensuring that the automation follows predefined business rules and security boundaries.

If your startup is exploring the creation of sophisticated or new workflows AI and Automation Agents: No-Code Solution for Businesses, Dynamic auditing is indispensable.

Secure Development Strategies and the Culture of Continuous Testing in Practice

Integrating LLM security is not a one-time step; it's a culture. Adopting frameworks like... IA Petri This requires Low-Code teams to think about security from the very beginning of the project, not just at the end.

- Validation of Prompts and Outputs: Use the IA Petri to test the robustness of its prompts and the security of the outputs in different model versions.

- Regression Test: After each fine adjustment (fine-tuningFor example, if the model is updated, the framework can be run to ensure that security fixes do not introduce new problems (security regression).

For those seeking to master the creation of robust and secure AI solutions, the foundation lies in... AI Coding Training: Create Apps with AI and Low-Code, which emphasizes the integration of secure development practices.

The Role of AI Infrastructure in the Adoption of Frameworks like Petri

The efficient execution of complex and large-scale tests, such as those performed by IA Petri, This requires a robust and scalable AI infrastructure.

startups systems require systems that can manage multiple models, orchestrate auditing agents, and process large volumes of test data cost-effectively.

Investing in adequate infrastructure is not just about speed, but about enabling the adoption of these cutting-edge tools to raise the standard of security and low-code development.

The Evolution of Model Security: The Future of AI, Petri, and the Open-Source Movement

The launch of IA Petri Anthropic's adoption is not an end point, but a catalyst for the next phase of AI security.

Its impact extends beyond fault detection, shaping the very philosophy of how... language model alignment It must be achieved and maintained.

Community Collaboration and Shaping the Global Alignment Pattern

As open source, the IA Petri benefits from collective wisdom. Researchers, security companies, and even Low-Code/No-Code enthusiasts can contribute new insights. test scenarios (Petri Scenarios), identifying and formalizing unique attack vectors.

This collaboration ensures that the framework stays ahead of new developments. emerging AI behaviors and become the industry standard for model evaluation. The strength of the community is the only way to combat the increasing complexity of Red Teaming of LLMs.

Preparing for AI Governance: The AI Act and Preventive Auditing

As the AI Governance becomes a global reality – with regulations such as EU AI Act Requiring increasing levels of transparency and security – the ability to demonstrate the robustness of a model will be a legal and market requirement.

O IA Petri It provides organizations, including startups No-Code, with a defensible mechanism to conduct preventative audits, generate comprehensive test documentation, and demonstrate that their systems have been rigorously evaluated against risks of misalignment and misuse.Agentic Assurance Framework).

The use of a agency security framework It's not just good technical practice; it's an investment in future compliance.

By mastering tools such as IA Petri, Low-code developers are positioning themselves as leaders in building responsible and secure AI solutions.

FAQ: Frequently Asked Questions about LLM Audits

Q1: What is the main objective of the IA Petri framework?

The main objective of IA Petri The goal is to automate the security audit process for Large Language Models (LLMs).

It uses AI agents (the Auditor Agent) to dynamically interact with the Target Model, generating complex, large-scale test scenarios to discover and mitigate emerging AI behaviors and risks of misalignment that would be missed in manual testing or static benchmarks.

Q2: How does AI Petri differ from human Red Teaming?

O red teaming Human intelligence is qualitative, in-depth, and focused on a limited set of attack vectors.

O IA Petri and quantitative, scalable and continuous. It automates and scales the process, allowing millions of interactions to be tested quickly and repeatedly, overcoming the scaling problem inherent in the manual evaluation of complex LLMs.

It doesn't replace human beings, but it dramatically expands their capabilities.

Q3: Can IA Petri be used in any Large Language Model?

Yes, the IA Petri It was designed to be modular and model-agnostic. It treats the LLM in auditing (the Target Model) as a black or white box, interacting with it through prompts and observing its behavior in the controlled environment.

This makes it applicable to any Big Language Model that can be orchestrated within a test environment, whether it's a proprietary model or an open-source model.

For the Low-Code Start Up community, this means the chance to build autonomous systems with a level of trust never before achieved.

The guarantee that your product behaves predictably and in a consistent manner is no longer an ideal, but an auditable reality.

The future of building robust, AI-powered softwares lies in the ability to integrate the AI automated audit natively.

O IA Petri This is the map, and now it's up to you to take the next step to master this new frontier of security and innovation.

If you're looking not only to create, but also to ensure the robustness and alignment of your own AI agents, explore... AI Coding Training: Create Apps with AI and Low-Code and raise the security standard of your solutions.