Prompt engineering is today a key skill for extracting practical intelligence from generative models like GPT-4o. The better the instruction, the better the result: more context, less rework, and genuinely useful answers.

Mastering this subject expands creativity, accelerates digital product development, and creates a competitive advantage. In this guide, you will learn the fundamentals, methodologies, and trends, with practical examples and links for going deeper into each topic.


What is Prompt Engineering?

Prompt engineering consists of designing carefully structured instructions that guide artificial intelligence toward accurate, ethical, and goal-aligned outputs.

In other words, it is the "conversational design" between humans and AI. The concept gained traction as companies realized how directly the clarity of a prompt affects the quality of the output.

From simple chatbots like the historic ELIZA to today's multimodal systems, this evolution underscores the importance of best practices. Want a deeper overview? OpenAI's official guide covers few-shot learning and chain-of-thought prompting in detail.

Linguistic and Cognitive Foundations

Language models respond to statistical patterns, so every word carries semantic weight. Ambiguity, polysemy, and token order all influence how the model interprets a request. To reduce noise:

- Use specific terms instead of generic ones.
- State the expected language, format, and tone.
- Divide the context into logical blocks (strategy chaining).
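The three precautions above can be combined into one reusable template. This is a minimal sketch, assuming a plain-text prompt: the helper `build_prompt` and the `### Heading` block labels are hypothetical choices for illustration, not a format that any particular model requires.

```python
def build_prompt(task: str, context_blocks: dict[str, str],
                 language: str, output_format: str, tone: str) -> str:
    """Assemble a prompt with a specific task, explicit output constraints,
    and context split into labeled blocks (hypothetical helper)."""
    sections = [f"### Task\n{task}"]
    # One labeled block per piece of context, kept in insertion order
    # so the model reads the material in a predictable sequence.
    for title, body in context_blocks.items():
        sections.append(f"### {title}\n{body.strip()}")
    # State language, format, and tone explicitly instead of leaving them implied.
    sections.append(
        "### Output requirements\n"
        f"- Language: {language}\n"
        f"- Format: {output_format}\n"
        f"- Tone: {tone}"
    )
    return "\n\n".join(sections)


prompt = build_prompt(
    task="Summarize the customer's complaint in three bullet points.",
    context_blocks={
        "Product": "Subscription analytics dashboard, Pro plan.",
        "Customer message": "The export button has failed since Monday...",
    },
    language="English",
    output_format="Markdown bullet list",
    tone="Neutral and concise",
)
print(prompt)
```

Keeping each piece of context under its own label makes it easy to swap one block (for example, a different customer message) without rewriting the rest of the instruction.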

These precautions reduce vague responses, a finding supported by research from Stanford HAI that analyzed the correlation between syntactic clarity and output accuracy.

Want to practice these techniques without writing code? The AI Agent and Automation Manager Training offers guided exercises that start with the basics and progress to advanced projects.

Practical Methodologies for Constructing Prompts

Prompt Sandwich

The Prompt Sandwich technique structures the prompt into three blocks: a contextual introduction, clear examples of input and output, and a final instruction asking the model to follow the pattern.

This format helps the AI understand exactly the type of response desired, minimizing ambiguities and promoting consistency in delivery.
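A minimal sketch of the Prompt Sandwich as a Python function, assuming a simple review-classification task. The function name `prompt_sandwich`, the `Input:`/`Output:` labels, and the sample reviews are illustrative assumptions; any consistent labeling works, as long as the examples and the final request share it.

```python
def prompt_sandwich(intro: str, examples: list[tuple[str, str]], new_input: str) -> str:
    """Build a three-layer prompt: context, worked examples, final instruction."""
    # Top layer: the contextual introduction.
    parts = [intro.strip(), ""]
    # Middle layer: input/output pairs that demonstrate the desired pattern.
    for i, (example_in, example_out) in enumerate(examples, start=1):
        parts.append(f"Example {i}")
        parts.append(f"Input: {example_in}")
        parts.append(f"Output: {example_out}")
        parts.append("")
    # Bottom layer: the instruction to apply the same pattern to a new input.
    parts.append("Following exactly the same pattern as the examples above, "
                 "produce the output for this input.")
    parts.append(f"Input: {new_input}")
    parts.append("Output:")
    return "\n".join(parts)


prompt = prompt_sandwich(
    intro="You classify product reviews as Positive, Negative, or Mixed.",
    examples=[
        ("Fast shipping and great quality.", "Positive"),
        ("Broke after two days.", "Negative"),
    ],
    new_input="Nice design, but the battery drains quickly.",
)
print(prompt)
```

Leaving the final `Output:` line open invites the model to complete the pattern rather than comment on it.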

Explicit Chain-of-Thought

This approach prompts the model to think in steps. Explicitly asking the AI to "reason aloud," or to lay out its steps before reaching a conclusion, significantly increases the chances of an accurate answer, especially in logical and analytical tasks.

Studies from Google Research report accuracy gains of up to 30% with this technique.
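A minimal sketch of a chain-of-thought request plus answer extraction, assuming the model follows the requested `Answer:` convention (a hypothetical format chosen here, not part of any API). The sample completion is hard-coded for illustration rather than produced by a real model.

```python
import re
from typing import Optional

# Hypothetical instruction; the wording and the "Answer:" marker are assumptions.
COT_SUFFIX = (
    "Think through the problem step by step, numbering each step. "
    "Then write the final result on its own line in the form 'Answer: <value>'."
)


def chain_of_thought_prompt(question: str) -> str:
    """Append an explicit request to reason in steps before answering."""
    return f"{question.strip()}\n\n{COT_SUFFIX}"


def extract_answer(completion: str) -> Optional[str]:
    """Return the text after the last 'Answer:' line, or None if absent.
    Taking the last match skips any earlier draft answers in the reasoning."""
    matches = re.findall(r"^Answer:[ \t]*(.+)$", completion, flags=re.MULTILINE)
    return matches[-1].strip() if matches else None


print(chain_of_thought_prompt("A cafeteria had 23 apples. It used 20 for lunch "
                              "and bought 6 more. How many apples does it have?"))

# Hard-coded example of a reasoning-style completion (not real model output).
sample_completion = (
    "1. The cafeteria starts with 23 apples.\n"
    "2. After using 20, it has 23 - 20 = 3.\n"
    "3. After buying 6 more, it has 3 + 6 = 9.\n"
    "Answer: 9"
)
print(extract_answer(sample_completion))  # 9
```

Asking for a fixed final-answer line keeps the visible reasoning useful for review while still letting code pick out the result reliably.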

Self-Assessment Criteria

Here, the prompt itself includes parameters for evaluating the generated response. Instructions such as "check for contradictions" or "evaluate clarity before finalizing" cause the model to perform a kind of internal review, delivering more reliable and refined outputs.
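The same idea as a small helper that appends review criteria to any prompt. The first two criteria paraphrase the examples above; the third, the helper name `with_self_assessment`, and the closing instruction are assumptions for illustration. This asks the model to review its own draft within a single response; it does not guarantee that the review actually happens.

```python
from typing import Sequence

# Illustrative criteria: the first two paraphrase the article, the third is an assumption.
DEFAULT_CRITERIA = (
    "Check the draft for contradictions.",
    "Evaluate its clarity before finalizing.",
    "Confirm that every part of the request has been answered.",
)


def with_self_assessment(prompt: str, criteria: Sequence[str] = DEFAULT_CRITERIA) -> str:
    """Append a numbered review checklist that the model should apply
    to its own draft before producing the final answer."""
    checklist = "\n".join(f"{i}. {c}" for i, c in enumerate(criteria, start=1))
    return (
        f"{prompt.strip()}\n\n"
        "Before giving your final answer, review your draft against these criteria:\n"
        f"{checklist}\n"
        "If any criterion fails, revise the draft, then output only the revised answer."
    )


print(with_self_assessment("Explain what a vector database is in one paragraph."))
```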

To see these methods in action within a mobile application, check out the case study in our FlutterFlow course, where each screen combines reusable prompts integrated with the OpenAI API.

Essential Tools and Resources

In addition to OpenAI's Playground, tools such as PromptLayer handle prompt versioning and per-token cost analysis. Programmers, in turn, find in the LangChain library a practical layer for composing complex pipelines.
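LangChain's own classes are not reproduced here. Instead, this plain-Python sketch shows the underlying idea of composing prompt steps: each step fills a template from a shared state dictionary and stores the model's reply for the next step. `make_step`, `compose`, and `fake_model` are hypothetical names, and `fake_model` stands in for a real API call so the example runs offline.

```python
from typing import Callable

# Hypothetical types: a model maps a prompt to text; a step maps state to state.
Model = Callable[[str], str]
Step = Callable[[dict[str, str]], dict[str, str]]


def make_step(name: str, template: str, model: Model) -> Step:
    """Build a step that formats `template` with the current state,
    calls the model, and stores the reply under `name`."""
    def step(state: dict[str, str]) -> dict[str, str]:
        prompt = template.format(**state)
        return {**state, name: model(prompt)}
    return step


def compose(*steps: Step) -> Step:
    """Chain steps so each one sees the outputs of the steps before it."""
    def pipeline(state: dict[str, str]) -> dict[str, str]:
        for step in steps:
            state = step(state)
        return state
    return pipeline


def fake_model(prompt: str) -> str:
    """Offline stand-in for an LLM call: returns the prompt's first line in upper case."""
    return prompt.splitlines()[0].upper()


ticket_pipeline = compose(
    make_step("summary", "Summarize this support ticket: {ticket}", fake_model),
    make_step("action", "Suggest one proactive action for: {summary}", fake_model),
)
result = ticket_pipeline({"ticket": "CSV export fails since Monday"})
print(result["summary"])
print(result["action"])
```

Because each step only reads from and writes to the shared state, steps can be reordered, tested in isolation, or swapped for a real model client without changing the rest of the pipeline.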

If you prefer no-code solutions, platforms such as N8N let you encapsulate instructions in clickable modules; a complete tutorial is available in our N8N Training.

It's also worth exploring open-source repositories on Hugging Face, where the community publishes prompts optimized for models such as Llama 3 and Mistral. This exchange shortens the learning curve and expands your repertoire.

Use Cases in Different Sectors

Customer Success: prompts that summarize tickets and suggest proactive actions.

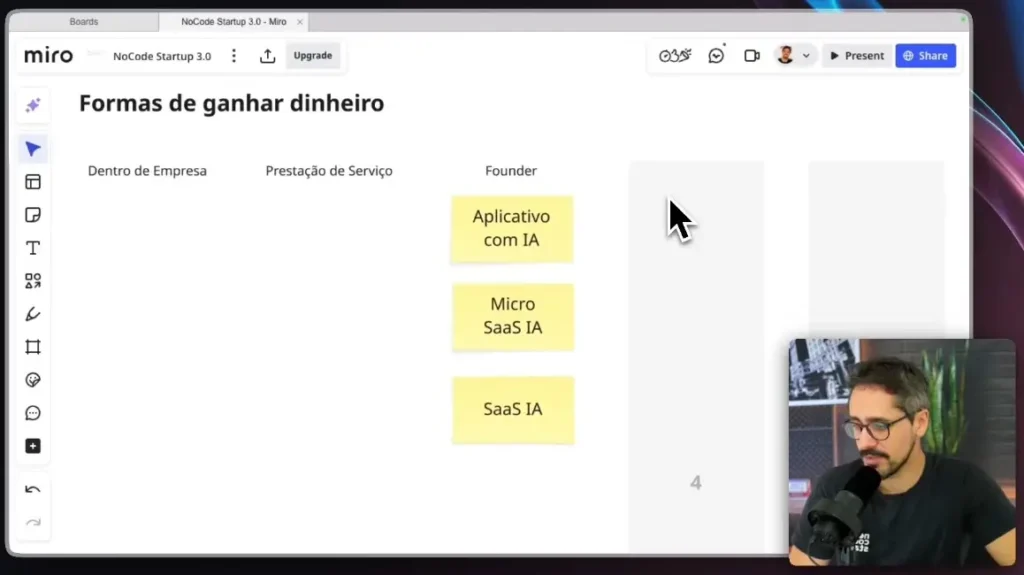

Marketing: generating targeted campaigns that explore personas built with SaaS IA NoCode.

Health: symptom screening with human medical validation, following the European AI Act's guidelines for responsible use.

Education: instant feedback on writing, highlighting areas for improvement.

Notice that all scenarios begin with a refined instruction. That's where prompt engineering reveals its value.

Future Trends in Prompt Engineering

The horizon points to multimodal prompts capable of orchestrating text, image, and audio in a single request. In parallel, the concept of prompt programming is emerging, in which the instruction is turned into executable mini-code.
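One way to picture prompt programming, sketched under the assumption that the model is asked to reply in JSON: the prompt is wrapped in an object with declared parameters and declared output keys, so missing inputs and malformed outputs fail loudly, the way a function call would. `PromptFunction` and its methods are hypothetical, not an existing library.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class PromptFunction:
    """A prompt treated like a small function: named parameters in,
    structured JSON output back (hypothetical illustration)."""
    template: str
    params: tuple[str, ...]
    output_keys: tuple[str, ...]

    def render(self, **kwargs: str) -> str:
        # Refuse to build the prompt if a declared parameter is missing.
        missing = set(self.params) - set(kwargs)
        if missing:
            raise ValueError(f"missing parameters: {sorted(missing)}")
        keys = ", ".join(self.output_keys)
        return (self.template.format(**kwargs)
                + f"\nRespond only with a JSON object with the keys: {keys}.")

    def parse(self, completion: str) -> dict:
        # json.loads raises a ValueError subclass on malformed JSON.
        data = json.loads(completion)
        absent = [k for k in self.output_keys if k not in data]
        if absent:
            raise ValueError(f"model output lacks keys: {absent}")
        return data


classify = PromptFunction(
    template="Classify the sentiment of this review: {review}",
    params=("review",),
    output_keys=("sentiment", "confidence"),
)
print(classify.render(review="Great value for money."))
print(classify.parse('{"sentiment": "positive", "confidence": 0.9}'))
```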

Open-source architectures such as Mixtral encourage communities to share standards, while regulations demand transparency and bias mitigation.

The Google Research study also indicates that dynamic prompts, adjusted in real time, will strengthen autonomous agents on complex tasks.
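A minimal sketch of a prompt adjusted at run time, assuming a simple word-limit requirement: after each reply, the loop checks the result and, if it breaks the limit, rewrites the instruction with concrete feedback before retrying. `run_with_adaptive_prompt` and `fake_model` are hypothetical; the fake model simply becomes concise once told about the limit, so the example runs offline.

```python
from typing import Callable


def run_with_adaptive_prompt(base_prompt: str, model: Callable[[str], str],
                             max_words: int, max_attempts: int = 3) -> tuple[str, list[str]]:
    """Call the model; if the reply exceeds `max_words`, rewrite the prompt
    with concrete feedback and retry. Returns the last reply and all prompts used."""
    prompt = base_prompt
    prompts_used: list[str] = []
    reply = ""
    for _ in range(max_attempts):
        prompts_used.append(prompt)
        reply = model(prompt)
        word_count = len(reply.split())
        if word_count <= max_words:
            break
        # Adjust the instruction in real time based on the reply just received.
        prompt = (f"{base_prompt}\n\nYour previous answer had {word_count} words. "
                  f"Answer again in at most {max_words} words.")
    return reply, prompts_used


def fake_model(prompt: str) -> str:
    """Offline stand-in for an LLM: verbose until the prompt mentions a word limit."""
    if "at most" in prompt:
        return "Short answer."
    return "This is a deliberately long and rambling answer " * 3


reply, prompts = run_with_adaptive_prompt("Explain prompt chaining.", fake_model, max_words=10)
print(reply)         # Short answer.
print(len(prompts))  # 2
```

If every attempt fails, the function returns the last reply, so callers should still validate it before use.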

Practical Results with Prompt Engineering and Next Professional Steps

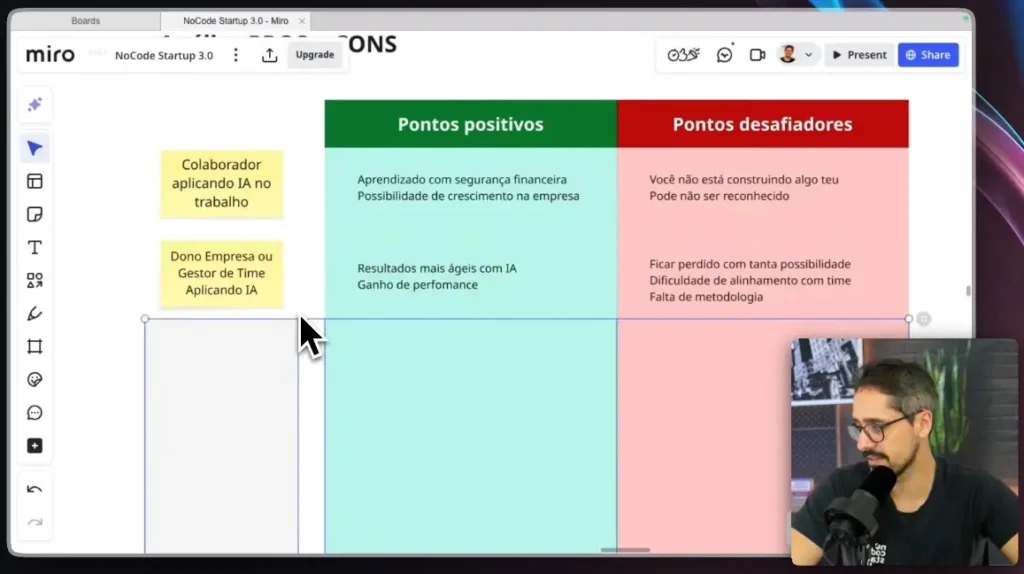

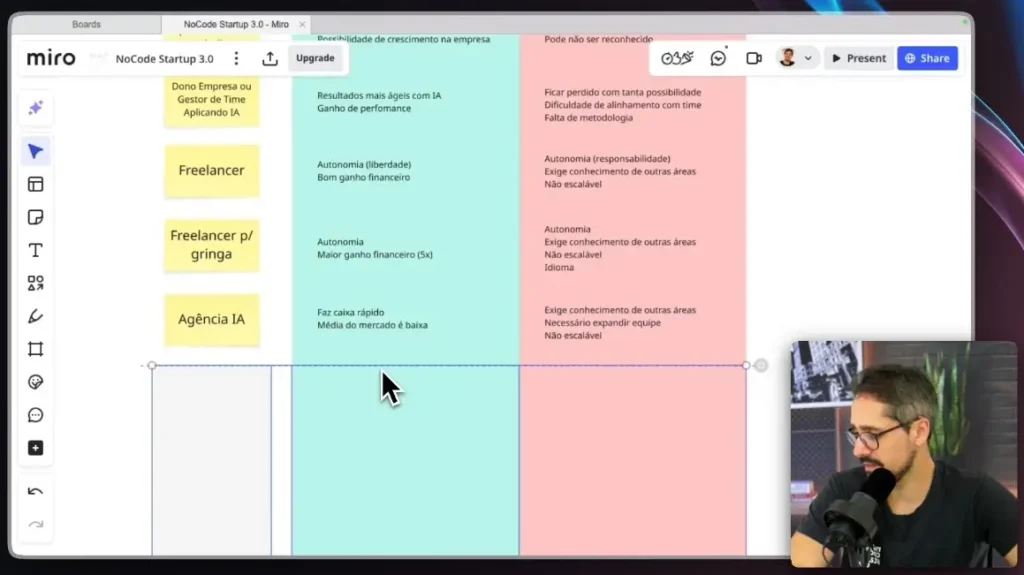

Prompt engineering has gone from a technical detail to a strategic factor. Mastering linguistic principles, applying tested methodologies, and using the right tools multiplies productivity and innovation, whether you are a founder, freelancer, or intrapreneur.

Ready to take your skills to the next level? Discover the SaaS IA NoCode Training from No Code Start Up, an intensive program where you build, launch, and monetize products equipped with advanced prompts.