The advancement of language models has transformed the way we interact with technology, and GLM 4.5 emerges as an important milestone in this evolution.

Developed by the Zhipu AI team, this model has been gaining global recognition by offering a powerful combination of computational efficiency, structured reasoning, and advanced support for artificial intelligence agents.

For developers, companies, and AI enthusiasts, understanding what GLM 4.5 is and how it compares with other LLMs is essential to taking full advantage of its features.

What is GLM 4.5 and why does it matter?

GLM 4.5 is a Mixture of Experts (MoE) language model with 355 billion total parameters, of which 32 billion are active per forward pass.

Its innovative architecture allows for the efficient use of computing resources without sacrificing performance in complex tasks.
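
As a rough illustration of what sparse activation means here, the two parameter counts quoted above imply that only about 9% of the weights take part in any single forward pass. The short Python calculation below uses only those two figures; the variable and function names are illustrative.

```python
# Parameter counts quoted in this article, in billions.
TOTAL_PARAMS_B = 355
ACTIVE_PARAMS_B = 32

def active_fraction(active_b: float, total_b: float) -> float:
    """Share of the model's parameters used in one forward pass."""
    return active_b / total_b

fraction = active_fraction(ACTIVE_PARAMS_B, TOTAL_PARAMS_B)
print(f"Active per forward pass: {fraction:.1%}")  # prints: Active per forward pass: 9.0%
```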

The model is also available in lighter versions, such as GLM 4.5-Air, optimized for cost-effectiveness.

Designed with a focus on reasoning tasks, code generation, and interaction with autonomous agents, GLM 4.5 stands out for its support for a hybrid thinking mode, which alternates between quick responses and in-depth reasoning on demand.

Technical characteristics of GLM 4.5

The technical advantage of GLM 4.5 lies in its combination of optimizations to the MoE architecture and improvements to the training pipeline. Among the most relevant aspects are:

Intelligent and balanced routing

The model uses sigmoid gates to route tokens between experts, improving the utilization of each expert, and applies QK-Norm to stabilize the range of attention logits.
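
To make the idea of sigmoid-gated routing concrete, here is a minimal, pure-Python sketch of top-k expert selection with sigmoid gates. It is a generic illustration of the technique, not Zhipu AI's implementation: the number of experts, the value of `top_k`, the router logits, and the renormalization step are all assumptions chosen for readability.

```python
import math

def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))

def route_token(router_logits: list[float], top_k: int) -> dict[int, float]:
    """Pick the top_k experts for one token using sigmoid gate scores.

    Each sigmoid score is computed independently of the others, unlike
    softmax, where raising one expert's logit lowers every other score.
    The selected scores are renormalized to sum to 1 (an assumption of
    this sketch) so they can be used as mixing weights.
    """
    scores = [sigmoid(z) for z in router_logits]
    chosen = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:top_k]
    total = sum(scores[i] for i in chosen)
    return {i: scores[i] / total for i in chosen}

# Hypothetical router logits for one token over 4 experts.
weights = route_token([2.0, -1.0, 0.5, 0.0], top_k=2)
print(weights)  # experts 0 and 2 are selected; their weights sum to 1
```

Only the selected experts run for that token, which is how an MoE model keeps the active parameter count well below the total.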

Extended context capability

With support for up to 128,000 input tokens, GLM 4.5 is well suited to long documents, extensive code, and deep conversation histories. It can also generate up to 96,000 output tokens.
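
In practice, these limits translate into a simple budget check before a request is sent. The sketch below uses only the two limits quoted above. The function name is hypothetical, and treating the input and output limits as independent caps is an assumption; token counts must come from the tokenizer of the deployment actually in use.

```python
MAX_INPUT_TOKENS = 128_000   # input limit quoted in this article
MAX_OUTPUT_TOKENS = 96_000   # output limit quoted in this article

def fits_limits(prompt_tokens: int, requested_output_tokens: int) -> bool:
    """Return True if a request stays within both quoted limits."""
    if prompt_tokens < 0 or requested_output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    return (prompt_tokens <= MAX_INPUT_TOKENS
            and requested_output_tokens <= MAX_OUTPUT_TOKENS)

print(fits_limits(120_000, 4_000))   # True: within both limits
print(fits_limits(130_000, 4_000))   # False: prompt exceeds the input limit
```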

Muon Optimizer and Grouped-Query Attention

These two techniques help GLM 4.5 maintain high computational performance as the model scales, benefiting both on-premises and cloud deployments.

GLM 4.5 Tools, APIs, and Integration

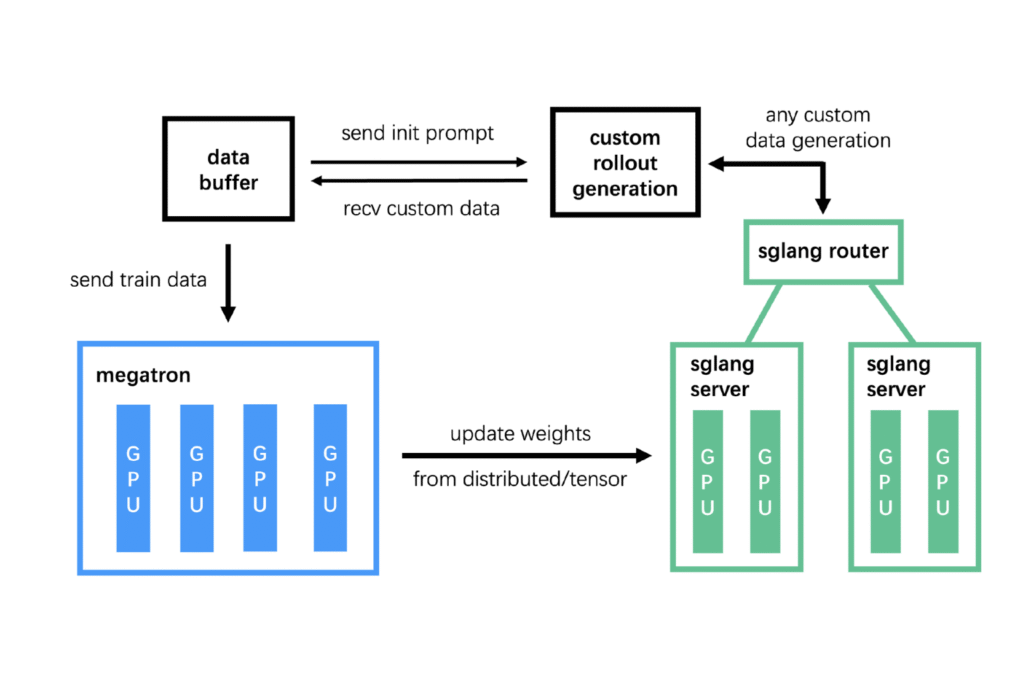

The Zhipu AI ecosystem facilitates access to GLM 4.5 through APIs compatible with the OpenAI standard, as well as SDKs in various languages. The model is also compatible with tools such as:

- vLLM and SGLang for local inference

- ModelScope and HuggingFace for use with open weights

- Environments with OpenAI SDK compatibility for easy migration of existing pipelines
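
Because the API follows the OpenAI standard, a request has the familiar chat-completions shape. The sketch below builds such a request with Python's standard library only and does not send it. The base URL, the model identifier `glm-4.5`, and the API key are placeholders and assumptions, not values confirmed by this article; take the real ones from the official documentation.

```python
import json
import urllib.request

# Placeholders: replace with values from the official documentation.
BASE_URL = "https://api.example.com/v1"   # hypothetical endpoint
MODEL_ID = "glm-4.5"                      # assumed model identifier
API_KEY = "YOUR_API_KEY"

def build_chat_request(prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completions request."""
    payload = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {API_KEY}",
        },
        method="POST",
    )

request = build_chat_request("Summarize the GLM 4.5 architecture in two sentences.")
print(request.full_url)
# To send it: urllib.request.urlopen(request), then JSON-decode the response body.
```

With the OpenAI SDK, the same migration usually amounts to pointing the client's `base_url` at the compatible endpoint and changing the model name.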

To see examples of integration, visit the official documentation for GLM 4.5.

Real-world applications: where GLM 4.5 shines

GLM 4.5 was designed for scenarios where generic models face limitations. Among its applications are:

Software Engineering

With strong results on benchmarks such as SWE-bench Verified (64.2) and Terminal Bench (37.5), GLM 4.5 is an excellent option for automating complex coding tasks.

Assistants and Independent Agents

On the TAU-bench and BrowseComp benchmarks, GLM 4.5 outperformed models such as Claude 4 and Qwen, proving effective in environments where interaction with external tools is essential.

Complex data analysis and reporting

With its strong contextual capabilities, the model can synthesize extensive reports, generate insights, and analyze lengthy documents efficiently.

Comparison with GPT-4, Claude 3 and Mistral: performance versus cost

One of the most notable aspects of GLM 4.5 is its significantly lower cost compared with models such as GPT-4, Claude 3 Opus, and Mistral Large, even though it offers comparable performance across various benchmarks.

For example, while the average cost of generating tokens with GPT-4 can exceed US$ 30 per million output tokens, GLM 4.5 averages US$ 2.2 per million output tokens, with even more affordable options such as GLM 4.5-Air at only US$ 1.1.
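
To see what these prices mean for a concrete workload, the sketch below multiplies them out. The prices are the ones quoted above (the GPT-4 figure is the US$ 30 threshold, so its real cost may be higher); the monthly token volume is a hypothetical example.

```python
# Output prices in US$ per million tokens, as quoted in this article.
PRICE_PER_MILLION = {
    "GPT-4": 30.0,
    "GLM 4.5": 2.2,
    "GLM 4.5-Air": 1.1,
}

def output_cost(model: str, output_tokens: int) -> float:
    """Cost in US$ of generating output_tokens with the given model."""
    return PRICE_PER_MILLION[model] * output_tokens / 1_000_000

monthly_tokens = 50_000_000  # hypothetical workload
for model in PRICE_PER_MILLION:
    print(f"{model}: US$ {output_cost(model, monthly_tokens):,.2f}")
```

For 50 million output tokens, this works out to US$ 1,500 at the GPT-4 threshold price, US$ 110 for GLM 4.5, and US$ 55 for GLM 4.5-Air.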

In terms of performance:

- Claude 3 excels in linguistic reasoning tasks, but GLM 4.5 comes close in mathematical reasoning and code execution.

- Mistral excels in speed and local deployment, but does not reach the 128k-token context depth of GLM 4.5.

- GPT-4, although robust, demands a high price for performance that GLM 4.5 matches in many scenarios at a fraction of the cost.

This cost-effectiveness positions GLM 4.5 as an excellent choice for startups, universities, and data teams looking to scale AI applications on a budget.

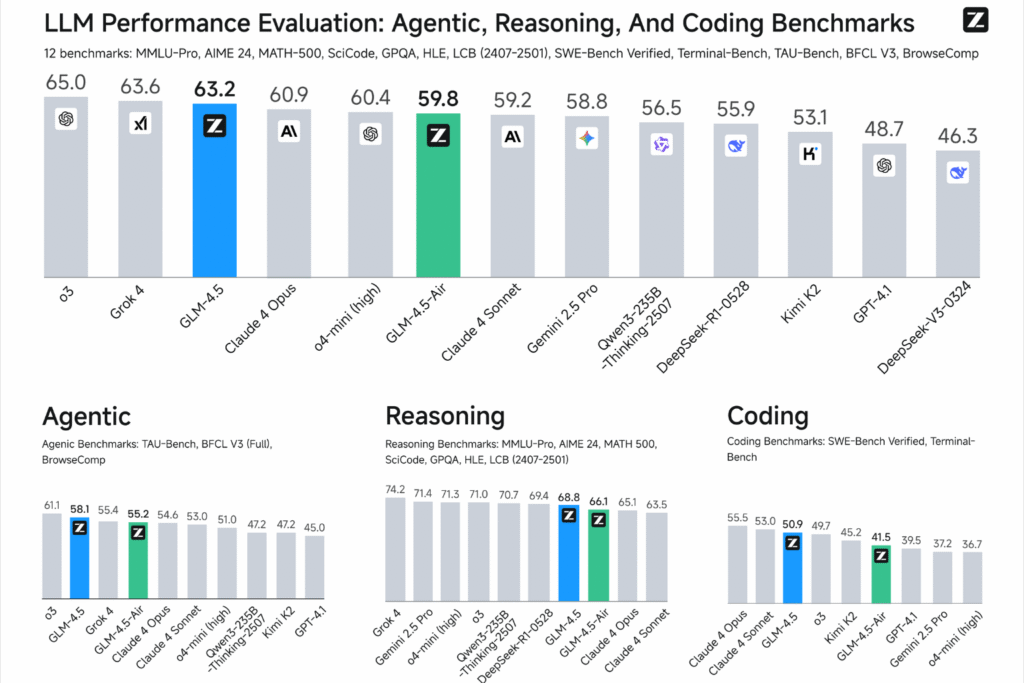

Performance comparison with other LLMs

GLM 4.5 not only competes with the big names in the market, but also surpasses them in several metrics. In terms of reasoning and execution of structured tasks, it achieved the following results:

- MMLU-Pro: 84.6

- AIME24: 91.0

- GPQA: 79.1

- LiveCodeBench: 72.9

Source: Official report from Zhipu AI

These numbers are clear indicators of a mature model, ready for large-scale commercial and academic use.

Future and trends for GLM 4.5

Zhipu AI's roadmap points to even greater expansion of the GLM product line, with multimodal versions such as GLM 4.5-V, which adds visual input (images and videos) to the equation.

This direction follows the trend of integrating text and images, which is essential for applications such as OCR, screenshot reading, and visual assistants.

Ultra-efficient versions are also expected, such as GLM 4.5-AirX, along with free options like GLM 4.5-Flash, which democratize access to the technology.

To keep up with these updates, it is recommended to monitor the official project website.

A model for those seeking efficiency with intelligence

By combining sophisticated architecture, versatile integrations, and excellent practical performance, GLM 4.5 stands out as one of the most solid options in the LLM market.

Its focus on reasoning, agents, and operational efficiency makes it ideal for mission-critical applications and demanding business scenarios.

For those seeking to explore the state of the art in language models, GLM 4.5 is more than just an alternative: it is a step forward.