AI infrastructure is the set of hardware and software tools developed to create and run artificial intelligence applications, such as facial recognition and chatbots.

In recent years, its importance has increased significantly due to the growing demand for AI solutions, which are essential in sectors such as healthcare and finance.

This infrastructure not only supports the development of new technologies, but also promotes innovation by integrating AI with existing systems, enhancing efficiency and effectiveness.

Collaboration between technology companies and research institutions further strengthens this evolution, ensuring that AI infrastructure remains a central pillar in digital transformation.

Components of AI Infrastructure: Hardware and Software

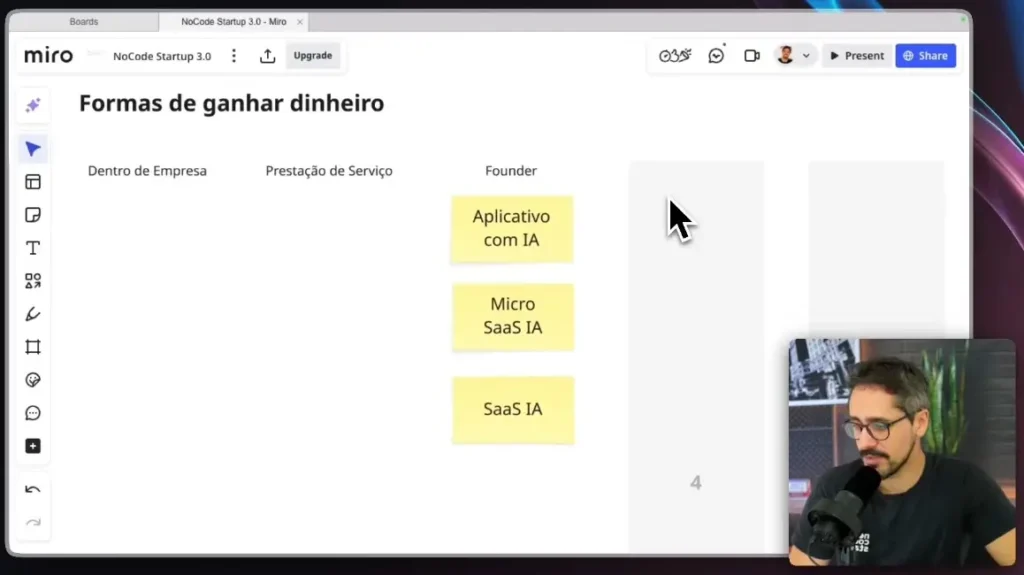

If you want to learn in practice how to assemble and optimize an AI infrastructure, No Code Start-Up offers specialized training in platforms such as Bubble, FlutterFlow, N8N, and agents with OpenAI.

Learn how to structure your own SaaS with AI in a guided environment, at low cost and with visual tools. Discover the SaaS AI training program from No Code Start-Up.

Required Hardware

For a robust AI infrastructure, it is crucial to prioritize components such as GPUs and TPUs. GPUs, such as NVIDIA's RTX series, are fundamental for intensive parallel processing, enabling the efficient training of complex deep learning models.

TPUs, developed by Google, are designed to accelerate machine learning operations, especially in cloud environments.

Furthermore, high-performance computing (HPC) supports the processing of large volumes of data, which is essential for quick and accurate insights.

Required Software

On the software side, MLOps platforms such as AWS SageMaker and Kubeflow are essential for managing and orchestrating machine learning workflows.

Data center architectures, such as those from NVIDIA, are tailored to optimize AI infrastructure, combining cutting-edge hardware with security and networking solutions.

Integration between Hardware and Software

Effective integration between hardware and software is vital for maximizing performance.

This includes coordination between high-capacity GPUs and MLOps platforms, ensuring that AI models are trained and deployed efficiently and securely.

This synergy is what allows AI infrastructure to meet the growing demands of today's market.

AI Infrastructure vs. Traditional IT Infrastructure

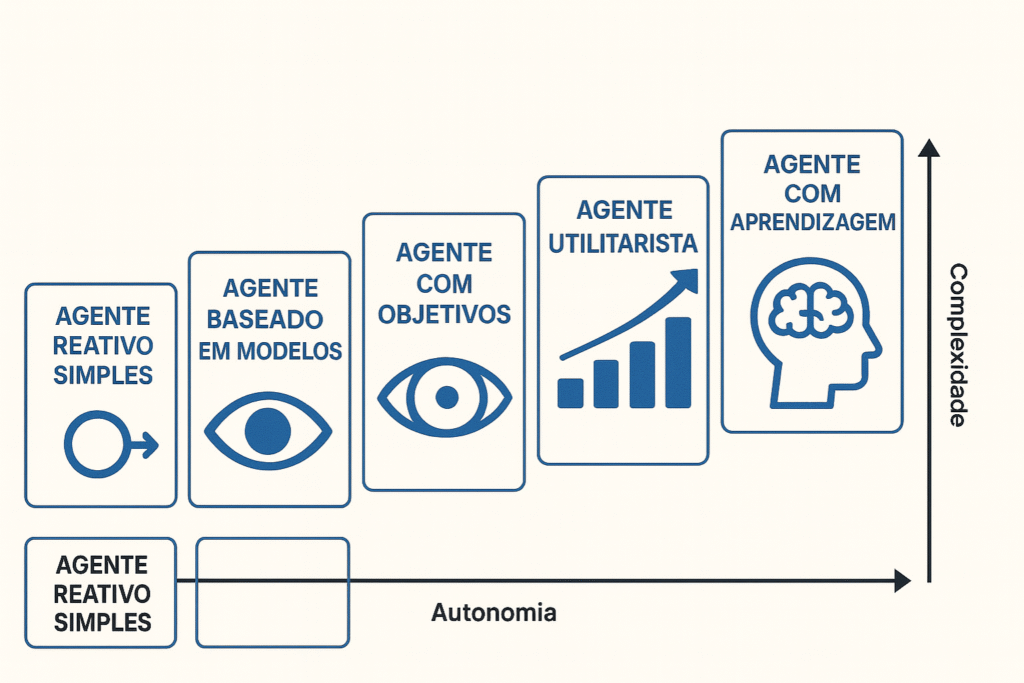

AI infrastructure and traditional IT differ significantly in terms of architecture and performance.

While traditional IT relies on manual configurations that often limit efficiency and security, AI infrastructure uses machine learning algorithms to automate processes, improving the response to problems in real time.

This results in superior performance and less downtime.

Parallel processing is another crucial differentiator. GPUs, with their thousands of cores, allow AI workloads to handle the growing volume of data more effectively than traditional CPUs.

“Parallelism is not only beneficial, but essential for the evolution of AI,” point out experts in Science and Data.
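
The split-and-combine idea behind parallel processing can be sketched in plain Python. This is a minimal illustration under stated assumptions, not GPU code: the worker threads only show how a workload is divided into independent shards, processed separately, and reduced into a single result. CPython threads will not speed up CPU-bound work like this; a GPU applies the same pattern across thousands of cores on much larger arrays. The function names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def shard(data, n_shards):
    """Split data into n_shards contiguous chunks of near-equal size."""
    size, rem = divmod(len(data), n_shards)
    chunks, start = [], 0
    for i in range(n_shards):
        # The first `rem` chunks take one extra element each.
        end = start + size + (1 if i < rem else 0)
        chunks.append(data[start:end])
        start = end
    return chunks


def partial_sum_of_squares(chunk):
    # Each worker processes only its own shard, with no shared state.
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(data, n_workers=4):
    chunks = shard(data, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partials = list(pool.map(partial_sum_of_squares, chunks))
    # Combine step: reduce the per-shard results into one value.
    return sum(partials)


print(parallel_sum_of_squares(list(range(1_000))))  # 332833500
```

The key design point is that shards never depend on each other, so adding more workers (or cores) only changes how many shards run at once, not the final result.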

Furthermore, AI infrastructure demands low-latency networks to keep applications efficient, especially in environments that operate in real time.

This contrasts with traditional IT, where latency can be a significant bottleneck. Thus, AI infrastructure not only overcomes traditional limitations but paves the way for continuous innovation.
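
For real-time applications, latency is usually judged by tail percentiles such as p99 rather than by the average, because a few slow requests are what users notice. The sketch below uses the nearest-rank percentile method; the latency samples and the 100 ms budget are hypothetical values chosen for illustration.

```python
import math


def percentile_nearest_rank(samples, p):
    """Smallest sample such that at least p% of all samples are <= it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


def within_latency_budget(latencies_ms, budget_ms, p=99):
    """True if the p-th percentile latency stays within the budget."""
    return percentile_nearest_rank(latencies_ms, p) <= budget_ms


# Hypothetical measurements: 98 fast requests and 2 slow outliers.
latencies = [12.0] * 98 + [180.0, 250.0]
print(percentile_nearest_rank(latencies, 50))  # 12.0
print(percentile_nearest_rank(latencies, 99))  # 180.0
print(within_latency_budget(latencies, budget_ms=100))  # False
```

Here the median looks excellent while p99 exceeds the budget, which is exactly the kind of bottleneck an average would hide.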

Where to Host Your AI Infrastructure: Cloud or On-Premise?

For professionals who want to master the selection and implementation of cloud or on-premise environments with a focus on AI, No Code Start-Up offers practical training focused on automation and performance. Check out the AI management training here.

Hosting AI infrastructure in the cloud offers several significant advantages, such as increased operational efficiency and reduced costs.

By eliminating the need to own hardware, companies can reduce operating expenses by up to 30%.

Furthermore, the cloud provides access to advanced technologies and scalability, with constant updates that improve security and competitiveness.

On the other hand, on-premise AI infrastructure offers greater control over data and security, but its costs grow with scale and can be hard to predict.

The need for initial investment in hardware and the complexity of implementation can be challenging.

In addition, scalability is limited, and expanding capacity requires significant investment.

When choosing between cloud and on-premise solutions, factors such as cost, scalability, and control are crucial.
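
One way to make the cost factor concrete is a break-even calculation: after how many months does buying hardware become cheaper than renting equivalent cloud capacity? The sketch below is a simplified model under stated assumptions: all figures are hypothetical, and it ignores depreciation, staff, energy price changes, and cloud discounts.

```python
import math


def breakeven_month(onprem_upfront, onprem_monthly, cloud_monthly):
    """First month in which cumulative on-premise cost is no higher than cloud cost.

    Cumulative costs after m months:
        on-premise: onprem_upfront + m * onprem_monthly
        cloud:      m * cloud_monthly
    Returns None if the cloud stays cheaper indefinitely.
    """
    monthly_saving = cloud_monthly - onprem_monthly
    if monthly_saving <= 0:
        return None
    return math.ceil(onprem_upfront / monthly_saving)


# Hypothetical figures in R$: R$ 20,000 of hardware plus R$ 500/month to run it,
# versus R$ 1,500/month for equivalent cloud capacity.
print(breakeven_month(20_000, 500, 1_500))  # 20
print(breakeven_month(20_000, 500, 400))    # None
```

If the expected project lifetime is shorter than the break-even month, the cloud is the cheaper option under these assumptions.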

The AI infrastructure market is projected to grow from US$68.46 billion in 2024 to US$171.21 billion in 2029, indicating a continued trend towards cloud solutions due to their flexibility and continuous innovation.
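
The projection implies a compound annual growth rate (CAGR) of roughly 20% per year, using the standard formula CAGR = (end / start)^(1 / years) - 1. The short check below applies it to the 2024 and 2029 figures quoted in the paragraph above.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values."""
    return (end_value / start_value) ** (1 / years) - 1


# Market size in US$ billions, 2024 -> 2029.
rate = cagr(68.46, 171.21, 2029 - 2024)
print(f"{rate:.1%}")  # 20.1%
```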

How Much Does it Cost to Implement a Scalable AI Infrastructure?

The cost of implementing an AI infrastructure in a medium-sized company can vary widely.

Factors such as the complexity of the AI agent, the desired functionalities, and the need for integration with existing systems play a crucial role in the total costs.

For example, while an AI platform can start with packages of R$ 60/month, more robust solutions can exceed R$ 1,050/month.

Scalability is essential to ensure that these systems can grow as demand increases, avoiding bottlenecks and maintaining operational efficiency.

Scalability can be either vertical, increasing the capacity of a single server, or horizontal, adding more machines to the system.

Both methods are crucial to ensuring that AI models can process large volumes of data without compromising performance.
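
The choice between vertical and horizontal scaling can be sketched as a simple capacity check: if the largest single server can absorb the target load, scale up; otherwise, scale out and count the machines needed. The throughput figures and the `efficiency` factor (a hypothetical allowance for coordination overhead between nodes) are assumptions for illustration only.

```python
import math


def plan_scaling(target_rps, node_rps, max_single_node_rps, efficiency=0.8):
    """Pick vertical or horizontal scaling for a target throughput.

    target_rps:          requests per second the system must sustain
    node_rps:            throughput of one standard node
    max_single_node_rps: throughput of the largest single server available
    efficiency:          hypothetical fraction of each node's throughput that
                         remains after coordination overhead when scaling out
    """
    if target_rps <= max_single_node_rps:
        # Vertical: one larger server is enough.
        return ("vertical", 1)
    # Horizontal: add nodes until their effective capacity covers the target.
    return ("horizontal", math.ceil(target_rps / (node_rps * efficiency)))


print(plan_scaling(300, node_rps=200, max_single_node_rps=400))  # ('vertical', 1)
print(plan_scaling(900, node_rps=200, max_single_node_rps=400))  # ('horizontal', 6)
```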

In a medium-sized project, the necessary hardware includes CPUs and GPUs, and the GPUs alone can cost between R$ 3,000 and R$ 20,000.
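
Combining the ranges quoted in this section gives a rough first-year budget, assuming a project both buys GPUs and pays for a monthly AI platform. This is a back-of-the-envelope sketch: it excludes CPUs, energy, maintenance, and staff.

```python
def first_year_cost(gpu_cost, platform_monthly, months=12):
    """One-off GPU purchase plus a recurring platform subscription."""
    return gpu_cost + platform_monthly * months


# Ranges quoted above (R$): GPUs 3,000 to 20,000; platforms 60 to 1,050 per month.
low = first_year_cost(3_000, 60)
high = first_year_cost(20_000, 1_050)
print(f"R$ {low:,} to R$ {high:,}")  # R$ 3,720 to R$ 32,600
```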

Properly planning these costs and considering scalability is fundamental to the long-term success of any AI implementation.

Best Practices for MLOps and Security in AI Infrastructure

Want to learn how to apply MLOps practically and without relying on technical teams?

No Code Start-Up offers courses that show how to integrate tools like Make, Dify, and Xano to build secure and scalable workflows. See our AI Training Programs.

In AI infrastructure, automating the CI/CD pipeline is essential to ensure the efficiency and quality of the models.

Tools like Airflow and Kubeflow make it easy to create consistent, reproducible workflows, from data preparation to model deployment.
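
Orchestrators such as Airflow and Kubeflow model a workflow as a directed acyclic graph (DAG) of steps, each running only after the steps it depends on. The sketch below reproduces that idea with Python's standard `graphlib` module (Python 3.9+); it is not the Airflow or Kubeflow API, and the step names and the trivial "model" (the mean of the data) are hypothetical.

```python
from graphlib import TopologicalSorter

# Each step lists the steps it depends on, as in a workflow DAG.
PIPELINE = {
    "prepare_data": set(),
    "train_model": {"prepare_data"},
    "evaluate_model": {"train_model"},
    "deploy_model": {"evaluate_model"},
}


def run_pipeline(pipeline, steps, ctx):
    """Run steps in dependency order, passing a shared context dict."""
    order = list(TopologicalSorter(pipeline).static_order())
    for name in order:
        steps[name](ctx)
    return order


# Hypothetical step implementations: each one reads and writes the context.
steps = {
    "prepare_data": lambda ctx: ctx.update(data=[1.0, 2.0, 3.0, 4.0]),
    # Toy "model": predict the mean of the training data.
    "train_model": lambda ctx: ctx.update(model=sum(ctx["data"]) / len(ctx["data"])),
    # Mean absolute error of that prediction on the same data.
    "evaluate_model": lambda ctx: ctx.update(
        mae=sum(abs(x - ctx["model"]) for x in ctx["data"]) / len(ctx["data"])
    ),
    "deploy_model": lambda ctx: ctx.update(deployed=ctx["mae"] < 2.0),
}

ctx = {}
print(run_pipeline(PIPELINE, steps, ctx))
print(ctx["model"], ctx["mae"], ctx["deployed"])  # 2.5 1.0 True
```

Because the order is derived from the declared dependencies rather than hard-coded, rerunning the pipeline always executes the same steps in the same sequence, which is what makes such workflows reproducible.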

Furthermore, continuous integration and continuous delivery (CI/CD) aids in the validation and automated testing of models, enabling a more agile and frequent development cycle.
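
A common automated test in such a pipeline is a validation gate: a candidate model is promoted only if it meets a minimum quality bar and does not regress against the model already in production. The metric name and thresholds below are hypothetical and would be set per project.

```python
def validation_gate(candidate, production, min_accuracy=0.90, max_regression=0.01):
    """Return (passed, reasons) for promoting a candidate model.

    candidate/production: dicts of evaluation metrics, e.g. {"accuracy": 0.93}.
    """
    reasons = []
    if candidate["accuracy"] < min_accuracy:
        reasons.append(
            f"accuracy {candidate['accuracy']:.3f} below minimum {min_accuracy:.3f}"
        )
    if candidate["accuracy"] < production["accuracy"] - max_regression:
        reasons.append("accuracy regressed versus the production model")
    return (not reasons, reasons)


passed, reasons = validation_gate({"accuracy": 0.93}, {"accuracy": 0.92})
print(passed)  # True
passed, reasons = validation_gate({"accuracy": 0.88}, {"accuracy": 0.92})
print(passed, reasons)
```

In a CI job, a failed gate would typically stop the pipeline (for example, by exiting with a non-zero status) so that the regressed model never reaches deployment.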

Data and model security is a critical concern in AI infrastructure. Third-party solutions such as DataSunrise can strengthen security and compliance by protecting sensitive data and implementing access controls.
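
The access-control idea can be illustrated with a minimal deny-by-default, role-based check plus simple masking of a sensitive field. This is a generic sketch, not how DataSunrise or any other product works; the roles, resources, and masking rule are hypothetical.

```python
# Hypothetical roles and the (action, resource) pairs each one is granted.
ROLE_PERMISSIONS = {
    "data_scientist": {("read", "training_data"), ("read", "models")},
    "ml_engineer": {("read", "training_data"), ("read", "models"), ("write", "models")},
    "auditor": {("read", "access_logs")},
}


def is_allowed(role, action, resource):
    """Deny by default: allow only actions explicitly granted to the role."""
    return (action, resource) in ROLE_PERMISSIONS.get(role, set())


def mask_email(email):
    """Hide most of the local part of an e-mail address."""
    local, _, domain = email.partition("@")
    return local[:1] + "***@" + domain if domain else "***"


print(is_allowed("ml_engineer", "write", "models"))     # True
print(is_allowed("data_scientist", "write", "models"))  # False
print(is_allowed("unknown_role", "read", "models"))     # False
print(mask_email("ana@example.com"))                    # a***@example.com
```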

Furthermore, continuous monitoring is essential for maintenance, allowing adjustments based on changes in performance and user behavior, thus ensuring that the infrastructure effectively meets user needs.
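
Continuous monitoring can start as simply as tracking a model metric over a sliding window and flagging the model when the recent average falls too far below the value measured at deployment. The baseline, window size, and tolerance below are hypothetical; production setups usually also watch input data drift and latency.

```python
from collections import deque
from statistics import mean


class MetricMonitor:
    """Flags a model when its recent average metric drops below a baseline."""

    def __init__(self, baseline, window=50, tolerance=0.05):
        self.baseline = baseline            # e.g. accuracy measured at deployment
        self.tolerance = tolerance          # hypothetical allowed drop
        self.recent = deque(maxlen=window)  # keeps only the last `window` values

    def record(self, value):
        self.recent.append(value)

    def needs_attention(self):
        if len(self.recent) < self.recent.maxlen:
            return False  # not enough data yet for a stable average
        return self.baseline - mean(self.recent) > self.tolerance


monitor = MetricMonitor(baseline=0.92, window=5)
for value in [0.91, 0.90, 0.92, 0.91, 0.90]:
    monitor.record(value)
print(monitor.needs_attention())  # False
for value in [0.80] * 5:
    monitor.record(value)
print(monitor.needs_attention())  # True
```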

The Future of AI Infrastructure: Trends and Innovations

Expected Technological Advances

In the coming years, AI infrastructure is expected to evolve rapidly with the incorporation of cutting-edge technologies such as quantum computing, which promises to revolutionize large-scale data processing.

Experts believe that these innovations will enable more sophisticated and efficient solutions, expanding the reach of AI in various applications.

Impact of AI in Different Sectors

The impact of AI is expanding in sectors such as healthcare, finance, and manufacturing. In healthcare, for example, AI is helping with the early diagnosis of diseases and the personalization of treatments.

In the financial sector, automation is transforming everything from risk analysis to the personalization of customer services, while in manufacturing, AI is optimizing production processes and predictive maintenance.

The Role of Infrastructure in Continuous Innovation

AI infrastructure plays a crucial role in supporting continuous innovation, providing the necessary foundation for the development of advanced solutions.

According to a recent study, integrating MLOps and security practices will become increasingly important to ensure the sustainability and efficiency of AI projects.

These elements are fundamental to maintaining a robust infrastructure that is adaptable to future demands.

Final Considerations on Machine Learning Infrastructure

Throughout this article, we explored the main pillars of AI infrastructure, highlighting how it supports everything from the simplest models to complex machine learning architectures.

Now, if you want to go beyond theory and apply this knowledge in practice, No Code Start-Up offers comprehensive training in AI, automation, intelligent agents, and much more.

Want to create your own AI infrastructure (without having to code from scratch)?

In NoCode StartUp's SaaS AI Training, you'll learn how to connect tools like Supabase, n8n, and OpenAI to build a complete, scalable, and secure applied AI foundation.