The massive introduction of Artificial Intelligence signals the most critical inflection point of the decade, transforming the physics of digital conflict.

We are not just facing new tools, but experiencing the consolidation of... Cybersecurity in the age of generative AI., where the speed of the machine begins to dictate organizational survival.

As pointed out by Global report from Research and Markets, AI will be the central pillar of defense strategies by 2031, marking the definitive transition from curiosity to systemic integration.

For technology professionals and No-Code developers, mastering this landscape is the only way to protect assets and reputation against threats that evolve in real time.

The New Physics of Digital Conflict: Understanding Cybersecurity in the Age of Generative AI

The great revolution brought about by Generative Artificial Intelligence in the field of information security lies not only in the sophistication of attacks, but also in the drastic reduction of entry barriers for cybercrime.

In the past, carrying out a complex attack required years of experience in programming and cryptography.

Today, the Cybersecurity in the age of generative AI. faces opponents who use Language Models (LLMs) Modified to write malware, create perfect phishing emails, and automate hacks.

The Democratization of Cybercrime and the Reduction of the Barrier to Entry

The emergence of tools known in the digital underworld as WormGPT and FraudGPT This perfectly illustrates this new scenario.

These are "unleashed" versions of popular programming language models, specifically trained with malware data and vulnerability exploitation techniques.

This allows malicious actors with little or no technical knowledge to carry out attacks that were previously the exclusive domain of elite hackers.

This "commoditization" of the attack means that small and medium-sized businesses are now targeted by automated campaigns.

Critical sectors, such as banking, are already feeling this pressure, experiencing what experts call a... an asymmetrical battle between banking AI and cybercriminals' AI, where the defense needs to always be one step ahead.

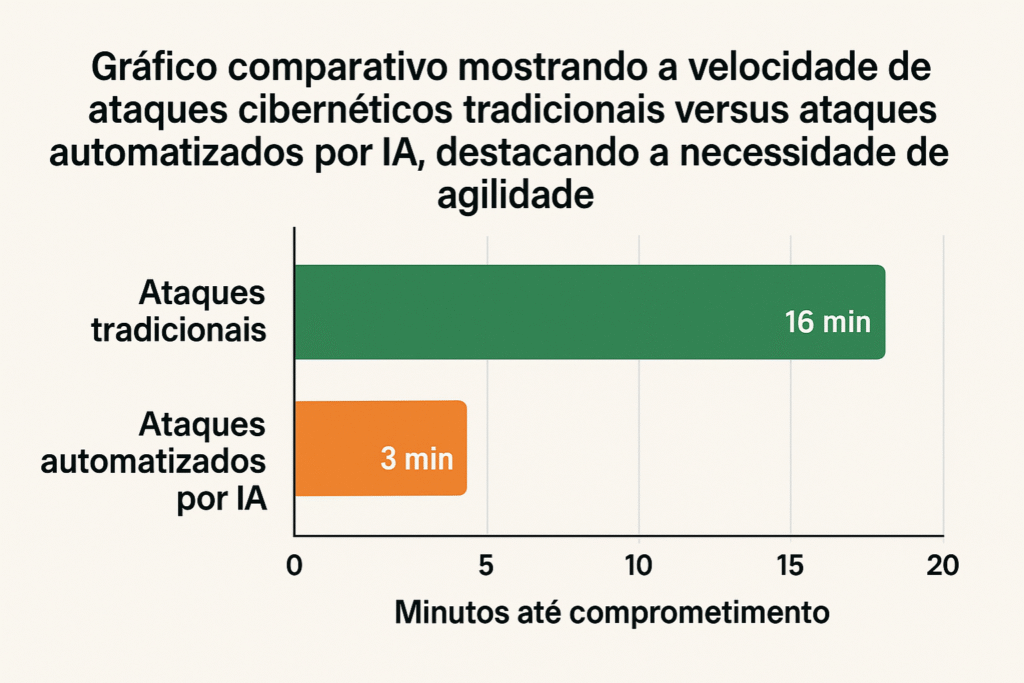

Machine Speed vs. Human Speed

Another crucial factor is the time asymmetry. While a human security team might take minutes or hours to triage an alert, an AI-based attack system operates in milliseconds.

The battle is now being fought at machine speed.

Therefore, modern defense cannot rely solely on manual reactions. It is necessary to integrate solutions that use AI itself to combat AI, creating digital immunity systems that learn autonomously.

Understanding what AI infrastructure is And how it sustains these defenses becomes foundational knowledge for any technology manager who wants to keep their operation resilient.

The 4 Main Threats Amplified by Generative AI

To protect your organization, it's vital to dissect the tactics that are being empowered by technology.

Cybersecurity in the age of generative AI is not just about new code, but about psychological manipulation on a large scale.

Social Engineering on an Industrial Scale (Phishing 2.0)

Traditional phishing was identifiable by grammatical errors. Generative AI has eliminated these flaws.

Today, attackers can generate emails of spear-phishing Highly personalized, mimicking the victim's tone of voice and vocabulary.

This ability for mass customization makes social engineering extremely difficult to detect using traditional filters.

Deepfakes and the Digital Identity Crisis

Perhaps the most visible face of Cybersecurity in the age of generative AI. be it the use of deepfakes.

The financial and legal sectors have been on high alert. Recently, the National Council of Justice (CNJ) had to intervene, establishing strict rules for the use of technology aiming to mitigate the risks of procedural and identity fraud.

Identity verification now needs to evolve to cryptographic proof-of-life and rigorous multi-factor authentication (MFA), as trust in human senses ("seeing is believing") has been broken.

Shadow AI and the Silent Leak of Corporate Data

The concept of “Shadow AI”This occurs when employees enter confidential data into public AI tools.

This information could then become part of the training for public models, creating leaks of intellectual property.

Implement AI Agent and Automation solutions Working within a controlled and company-sanctioned environment is the most effective way to mitigate this risk, offering employees the tools they want, but with the necessary governance.

Data Poisoning and Prompt Injection

In addition to protecting outgoing data, we must also be concerned with the integrity of incoming data.

Attacks of “Data Poisoning”These methods aim to corrupt AI models during their training. Prompt Injection, on the other hand, manipulates a model's output through malicious commands.

Defense Strategies: How to Build a Digital Fortress

The defensive posture must shift from "perimeter blocking" to "continuous resilience.".

Cybersecurity in the age of generative AI requires an approach that combines technology, processes, and regulatory compliance.

Strict Adoption of the Zero Trust Model

The new mantra is "never trust, always verify." Zero Trust architecture assumes that breach is inevitable. No identity should have implicit access to network resources.

In practice, this means network segmentation and continuous verification, blocking anomalous behavior even after initial authentication.

AI Governance and Compliance (ISO 42001 and CNJ Standards)

AI implementation cannot be haphazard. In addition to international frameworks such as... ISO/IEC 42001 (AI Management System), The national landscape is also making progress in regulation.

THE CNJ Resolution No. 615/2025, For example, it defines clear guidelines for governance, auditability, and security in the use of generative AI by the judiciary, serving as a model for other regulated sectors.

Mature companies are establishing ethics committees to ensure that the use of AI complies with these new legal and technical requirements.

The No-Code Professional as a Security Agent

In Cybersecurity in the age of generative AI., The citizen developer plays a leading role.

When you create automations and applications that process business data, you are building critical infrastructure.

Safety by Design in Visual Development

Modern platforms have robust features, but they need to be configured correctly.

This includes defining privacy rules in the database (Row Level Security) and securely managing API keys.

The lack of knowledge of these practices is the main vulnerability in Low-Code projects.

The Importance of Continuous Training in AI Coding

Simply knowing how to drag and drop components isn't enough. Understanding the logic behind integrating artificial intelligence APIs securely is crucial.

THE AI Coding training from No Code Startup It prepares professionals for this challenge, teaching them how to design scalable solutions that respect best practices in security and privacy from day one.

Frequently Asked Questions about Cybersecurity in the Age of Generative AI

Will generative AI replace cybersecurity professionals?

No. AI will function as a force multiplier. Reports like the one from ResearchAndMarkets These findings indicate that the demand for qualified professionals to manage these complex tools and strategies will continue to grow.

How can I protect my company against deepfakes in online meetings?

Establish "out-of-band" verification protocols. If there are urgent financial requests via video, confirm through another channel. Raise awareness about the tactics used in Bank fraud with AI It's the first line of defense.

Which AI tools are safe for corporate use?

Opt for "Enterprise" versions that contractually guarantee data privacy, preventing your information from training public models.

What is "Shadow AI"?

It is the unmonitored use of AI by employees. This creates risks of data leaks and violations of regulations such as... CNJ Resolution No. 615, which requires strict control over the technological tools used.

Is No-Code safe?

Yes, if implemented correctly. Security depends on the correct configuration of permissions and authentication, central themes in any... professional development training.

The Way Forward: Vigilance and Adaptation

THE Cybersecurity in the age of generative AI. It is not a final destination, but an ongoing journey. The tools that innovate are the same ones that demand caution.

The answer lies in in-depth technical education, robust governance based on international standards, and choosing partners who prioritize safety.

For companies, investing in AI-based defense is mandatory. For professionals, raising the bar of knowledge is the only way to remain relevant and secure.

The future belongs to those who build intelligently and protect rigorously.

Master the Creation of Secure Software with AI

Don't wait until it's too late to learn. If you want to be at the forefront of development and ensure your applications are robust and secure, learn more about... AI Coding training from No Code Startup.

Learn how to create advanced softwares with Artificial Intelligence and Low-Code, applying best practices in security and governance from the very first line of logic.