Over the last five years, Hugging Face has evolved from a chatbot launched in 2016 into a collaborative hub that brings together pre-trained models, libraries, and AI apps; it is one of the fastest and most cost-effective ways to validate NLP solutions and bring them to market.

Thanks to its vibrant community, detailed documentation, and native integration with PyTorch, TensorFlow, and JAX, Hugging Face has become the go-to platform for rapidly adopting AI. In this guide, you'll learn what it is, how to use it, how much it costs, and the quickest way to put pre-trained models into production.

Pro Tip: If your goal is to master AI without relying entirely on code, check out our AI Agent and Automation Manager Training. In it, we show how to connect Hugging Face models to no-code tools like Make, Bubble, and FlutterFlow.

What is Hugging Face, and why does nearly every modern NLP project involve it?

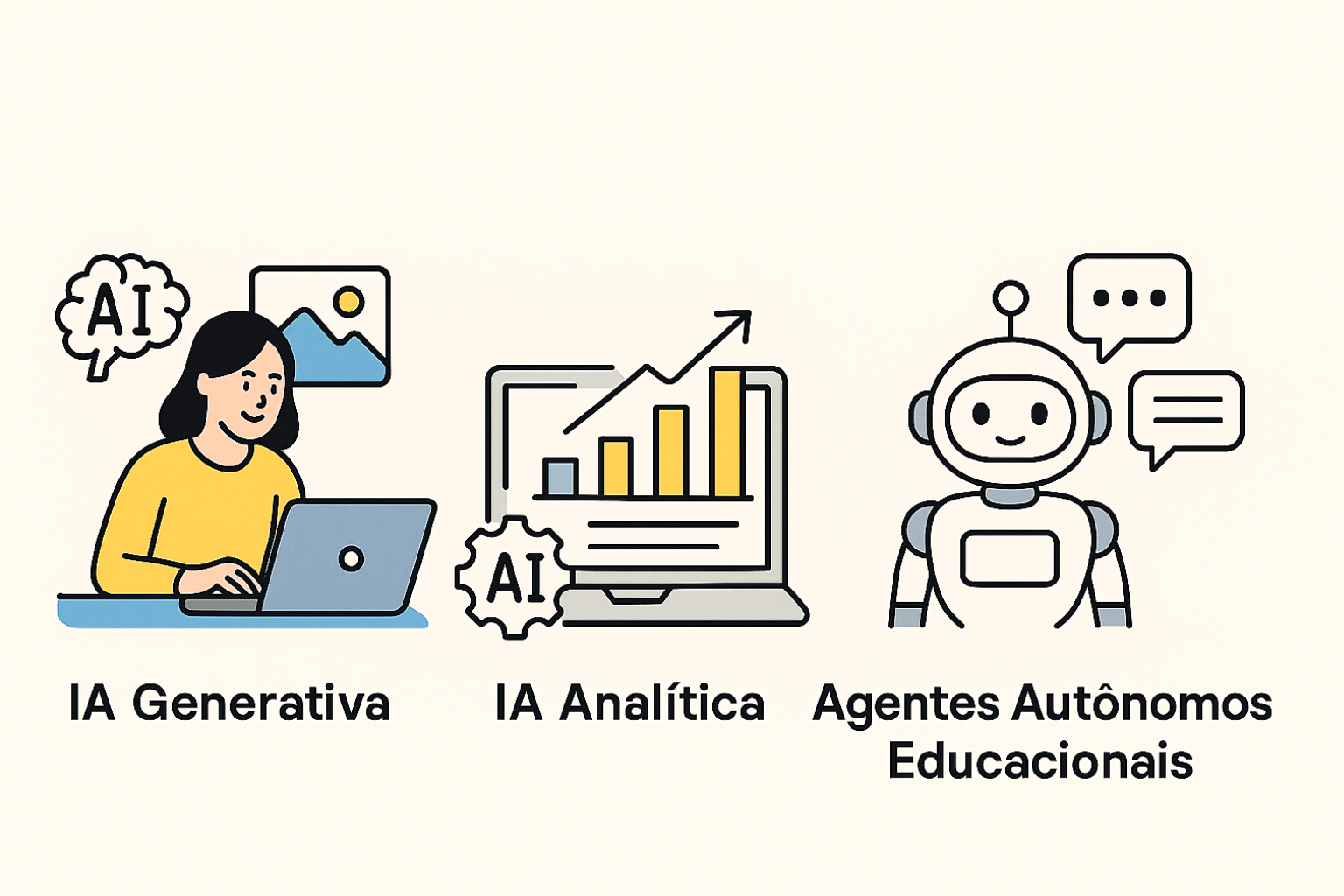

In essence, Hugging Face is a collaborative open-source repository where researchers and companies publish pre-trained models for tasks involving language, vision, and, more recently, multimodality. That definition alone undersells it, though, because the platform comprises three key components:

- Hugging Face Hub – a “GitHub for AI” that versions models, datasets, and interactive apps called Spaces.

- Transformers Library – a Python API that exposes thousands of state-of-the-art models with just a few lines of code, compatible with PyTorch, TensorFlow, and JAX.

- Auxiliary tools – such as datasets (data ingestion), diffusers (diffusion models for image generation), and evaluate (standardized metrics).

In this way, developers can explore the repository, download trained weights, adjust hyperparameters on a laptop, and publish interactive demos without leaving the ecosystem.

Consequently, the development and feedback cycle becomes much shorter, which is essential for MVP prototyping, a common pain point for our readers who are founders.

Main products and libraries (Transformers, Diffusers & Co.)

Next, we delve into the pillars that bring Hugging Face to life. Notice how each component was designed to cover a specific stage of the AI journey.

Transformers

Initially created by Thomas Wolf, the Transformers library abstracts away the details of architectures such as BERT, RoBERTa, GPT-2, T5, BLOOM, and Llama.

The package includes efficient tokenizers, model classes, heads for supervised tasks, and even ready-made pipelines (pipeline("text-classification")).

As a result, complex tasks shrink to four or five lines of code, which shortens time-to-market.
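To make the "four or five lines" idea concrete, here is a minimal sketch of the pipeline API. The checkpoint name is an assumption chosen for illustration (any text-classification model on the Hub should work), and `summarize` is a hypothetical helper, not part of the library. The first call to `classify` downloads the weights, so it needs a network connection and a backend such as PyTorch.

```python
def classify(texts, model_name="distilbert-base-uncased-finetuned-sst-2-english"):
    """Classify a list of strings with a pre-trained checkpoint.

    model_name is an illustrative assumption; swap in any
    text-classification model from the Hub.
    """
    # Imported lazily: transformers is heavy and needs a backend such as PyTorch.
    from transformers import pipeline

    classifier = pipeline("text-classification", model=model_name)
    # Returns one dict per input, with a "label" and a "score" key.
    return classifier(texts)


def summarize(texts, results):
    """Hypothetical helper: pair each input with its label and rounded score."""
    return [
        (text, result["label"], round(result["score"], 3))
        for text, result in zip(texts, results)
    ]
```

With the library installed, `summarize(texts, classify(texts))` would give one `(text, label, score)` tuple per input.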

Datasets

With datasets, loading 100 GB of text or audio becomes trivial. The library streams files in chunks, performs intelligent caching, and allows for parallel transformations (map, filter). For those who want to train autoregressive models or evaluate them quickly, this is the natural choice.
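The streaming, map, and filter behaviour described above can be sketched roughly as follows. The dataset name is left to the caller, and the `"text"` column, the `n_chars` field, and the helper names are assumptions made for this example.

```python
def add_length(example):
    """Return a copy of the example with a character count added."""
    return {**example, "n_chars": len(example["text"])}


def is_long_enough(example, min_chars=200):
    """Keep only examples whose text has at least min_chars characters."""
    return len(example["text"]) >= min_chars


def preview_stream(dataset_name, split="train", min_chars=200, limit=5):
    """Stream a Hub dataset and return the first few filtered examples.

    Assumes the dataset has a "text" column.
    """
    # Imported lazily so the helpers above work without the library installed.
    from datasets import load_dataset

    # streaming=True yields examples on the fly instead of downloading everything.
    stream = load_dataset(dataset_name, split=split, streaming=True)
    stream = stream.filter(lambda ex: is_long_enough(ex, min_chars))
    stream = stream.map(add_length)
    return list(stream.take(limit))
```

Because the stream is lazy, only the examples needed to fill `limit` are fetched, which is what makes very large corpora workable on a small machine.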

Diffusers

The generative AI revolution is not limited to text. With diffusers, any developer can try Stable Diffusion, ControlNet, and other diffusion models. The API is consistent with transformers, and the Hugging Face team ships frequent updates.
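As a rough illustration of how similar the diffusers API feels, the sketch below loads a text-to-image pipeline and saves one image. The checkpoint is deliberately left as a parameter, since which diffusion model you can access is an assumption this example cannot make for you; `choose_device` and `generate_image` are hypothetical helper names.

```python
def choose_device(cuda_available):
    """Pick a device and dtype name: half precision on GPU, full precision on CPU."""
    return ("cuda", "float16") if cuda_available else ("cpu", "float32")


def generate_image(model_id, prompt, output_path="sample.png", steps=30):
    """Generate one image from a text prompt and save it to disk.

    model_id must be a text-to-image diffusion checkpoint available to you on the Hub.
    """
    # Imported lazily: both packages are large and optional.
    import torch
    from diffusers import DiffusionPipeline

    device, dtype_name = choose_device(torch.cuda.is_available())
    pipe = DiffusionPipeline.from_pretrained(
        model_id, torch_dtype=getattr(torch, dtype_name)
    )
    pipe = pipe.to(device)
    # Text-to-image pipelines return an object whose .images is a list of PIL images.
    image = pipe(prompt, num_inference_steps=steps).images[0]
    image.save(output_path)
    return output_path
```

Running this on a CPU works in principle but is slow; a GPU is the practical choice for anything beyond a quick test.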

Gradio & Spaces

Gradio has become synonymous with quick demos. Create an interface, pass in the model, deploy, and there you go: a public Space is born.

For startups, it's a chance to show a proof of concept to investors without spending hours setting up a front end.
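A demo of this kind can be sketched in a few lines. The example below assumes the model is a text-classification pipeline (or any callable returning a list of `{"label", "score"}` dicts); `to_label_scores` and `build_demo` are hypothetical names used only here.

```python
def to_label_scores(results):
    """Convert pipeline-style output into the {label: confidence} dict a label output displays."""
    return {item["label"]: float(item["score"]) for item in results}


def build_demo(classifier, title="Sentiment demo"):
    """Wrap a classifier callable in a one-textbox Gradio interface."""
    # Imported lazily so the helper above can be used without gradio installed.
    import gradio as gr

    def predict(text):
        # For a single string, the pipeline returns a list with one result dict.
        return to_label_scores(classifier(text))

    return gr.Interface(fn=predict, inputs="text", outputs="label", title=title)
```

Calling `build_demo(classifier).launch()` starts a local web page; the same script, saved as app.py, is what a Gradio Space would run.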

If you want to learn how to create visual MVPs that consume Hugging Face APIs, see our FlutterFlow Course and integrate AI into mobile apps without writing Swift or Kotlin.

Is Hugging Face paid? Clarifying myths about costs.

Many beginners ask whether "Hugging Face is paid". The short answer: there is a robust free plan, but also subscription plans for corporate needs.

Free: includes unlimited pull/push on public repositories, creation of up to three free Spaces (60 min of CPU/day), and unrestricted use of the transformers library.

Pro & Enterprise: add private repositories, larger GPU quotas, auto-scaling for inference, and dedicated support.

Regulated companies, such as those in the financial sector, can also opt for an on-premises deployment to keep sensitive data within their network.

Therefore, those who are validating ideas or studying individually will hardly need to spend any money.

It only makes sense to migrate to a paid plan when inference traffic grows, which typically coincides with market traction.

How to start using Hugging Face in practice

Following piecemeal tutorials often leads to frustration. That's why we've prepared a single roadmap that runs from the first pip install to deploying a Space, organized in logical order:

- Create an account at https://huggingface.co and generate an access token (Settings ▸ Access Tokens).

- Install key libraries: pip install transformers datasets gradio. The pipelines also need a backend such as PyTorch (pip install torch).

- Pull a model, for example bert-base-uncased, with from transformers import pipeline.

- Run local inference: pipe = pipeline("sentiment-analysis"); pipe("I love No Code Start Up!"). Observe the response, which arrives in milliseconds once the model is loaded.

- Publish a Space with Gradio: create app.py, declare the interface and push via huggingface-cli. In minutes you'll have a public link to share.
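The final publishing step can also be done from Python. The sketch below swaps the command-line push for the `huggingface_hub` client, which is a substitution made for this example rather than the article's own method. It assumes an access token is available in an `HF_TOKEN` environment variable, that app.py and requirements.txt already exist locally, and that `repo_id` follows the `username/space-name` form; `space_url` and `publish_space` are hypothetical helper names.

```python
import os


def space_url(repo_id):
    """Build the public URL of a Space from its "username/space-name" id."""
    return f"https://huggingface.co/spaces/{repo_id}"


def publish_space(repo_id, files=("app.py", "requirements.txt"), token=None):
    """Create a Gradio Space (if needed) and upload local files to it."""
    # Imported lazily so space_url works without the client installed.
    from huggingface_hub import HfApi

    # Falls back to the HF_TOKEN environment variable (an assumption of this sketch).
    api = HfApi(token=token or os.environ.get("HF_TOKEN"))
    api.create_repo(repo_id, repo_type="space", space_sdk="gradio", exist_ok=True)
    for path in files:
        api.upload_file(
            path_or_fileobj=path,
            path_in_repo=os.path.basename(path),
            repo_id=repo_id,
            repo_type="space",
        )
    return space_url(repo_id)
```

Keeping the token in an environment variable rather than in the script avoids committing it to the Space by accident.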

After completing these steps, you will be able to:

• Adapt models to your own data with fine-tuning.

• Integrate the REST API into your Bubble application.

• Secure inference via private API keys.
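Calling a hosted model over REST with a private key can be sketched using only the standard library. The endpoint URL is left as a parameter because it depends on how the model is hosted; the `{"inputs": ...}` payload and the Bearer header reflect the usual convention for Hugging Face inference endpoints, but treat the exact shape as an assumption to confirm against your endpoint. The function names are hypothetical.

```python
import json
import urllib.request


def build_inference_request(endpoint_url, token, text):
    """Prepare (but do not send) an authenticated JSON POST request."""
    payload = json.dumps({"inputs": text}).encode("utf-8")
    return urllib.request.Request(
        endpoint_url,
        data=payload,
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def query(endpoint_url, token, text, timeout=30):
    """Send the request and decode the JSON response."""
    request = build_inference_request(endpoint_url, token, text)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

The same three ingredients (URL, Authorization header, JSON body) are what a no-code API connector asks for, so this doubles as a checklist when configuring one.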

Integration with No-Code Tools and AI Agents

One of the distinguishing features of Hugging Face is how easily it integrates with no-code tools. For example, in n8n you can receive text via a webhook, send it to the classification pipeline, and write the resulting tags to Google Sheets, all without writing any server code.

In Bubble, the API Connector plugin imports the model endpoint and exposes inference in a drag-and-drop workflow.

If you want to explore these flows in more detail, we recommend our Make Course (Integromat) and the SaaS IA NoCode Training, where we create end-to-end projects, including authentication, sensitive data storage, and usage metrics.
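For readers curious about what such a workflow does between the webhook and the spreadsheet, the transformation can be sketched in plain Python. The response shapes handled here (a flat list of predictions, or a list wrapped in another list) and the 0.5 threshold are assumptions for illustration, and both function names are hypothetical.

```python
def flatten_predictions(response):
    """Accept a flat list of predictions or a list nested one level deep."""
    if response and isinstance(response[0], list):
        return response[0]
    return list(response)


def to_sheet_row(text, response, threshold=0.5):
    """Turn a classification response into a [text, tags] spreadsheet row."""
    predictions = flatten_predictions(response)
    # Highest-scoring labels first, keeping only those above the threshold.
    ranked = sorted(predictions, key=lambda p: p["score"], reverse=True)
    tags = [p["label"] for p in ranked if p["score"] >= threshold]
    return [text, ", ".join(tags)]
```

In a no-code tool, each of these steps corresponds to a node: one to unwrap the response, one to filter by score, and one to append the row.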